Native Multipath TCP support for OpenSSH

During the Open Week at UCLouvain, we added native Multipath TCP support on the OpenSSH client and server in order to connect to a remote machine using multiple network interfaces.

What’s the point ?

There are several benefits to this native support :

- Improve the total bandwidth by combining the bandwidth of each interface.

- Move the ssh session from an interface to another without losing the connection.

- Keep the connection alive even if there is no interface connected during some time.

Compiling and installation

Follow the instructions shown in the README file.

Setting up mptcpd

mptcpd is a daemon that allows to automatically setup new interfaces with mptcp. It comes with a config file allowing to specify a mode or list of modes for each new mptcp address. Our tests were done using the ‘subflow’ mode.

Manual mptcp configuration

New addresses can be added manually using the ip command. To do so, please refer to the redhat documentation.

Prevent timeouts

Configure those options in sshd_config:

- ClientAliveInterval 60

- > Sends a packet to the client after 60 seconds of inactivity.

- ClientAliveCountMax 60

- > Closes the connection when 60 packets have been sent and no response have been received.

Usage

Clone this fork of openssh, compile on the mptcp_support branch and install, set the client and server config files:

- Uncomment UseMPTCP no in ssh_config and change to yes

- Uncomment UseMPTCP no in sshd_config and change to yes

Then run SSH as usual.

Alternatively, run the following commands from the directory where the compiled binaries are located:

On the server side:

$(pwd)/sshd -o UseMPTCP=yes

On the client side:

./ssh -o UseMPTCP=yes user@hostname

Real life testing

The first step to check our port of ssh to mptcp was to see in Wireshark if mptcp packets were being transmitted. Then, the real life testing can begin.

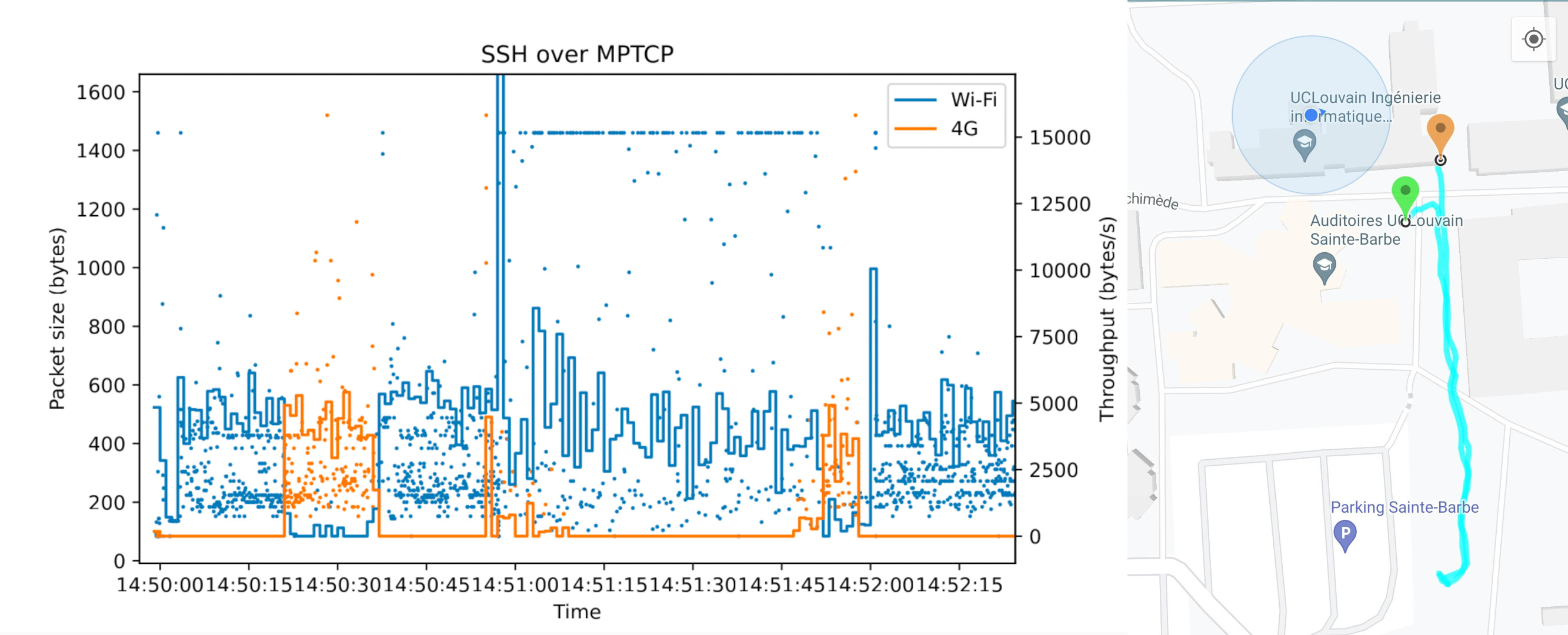

Here, at the UCLouvain, we have a lot of auditoriums and computer labs close to each other. The eduroam network is used accross buildings to give students access to the internet. This gave us the idea to walk from building to building, switching access point often and to keep a mobile 4G connection used by Multipath TCP. The goal was to see if an ssh session would break over time. You can see the path we have taken accross the city in the following picture:

Along with it, we have captured the packets on the client device to see which interface was used and when. In the plot, we have only selected the “interesting” part of the data when the connection switches between 4G and wifi. We see on the first part that the 4G took over. We think it is because there was a black zone in eduroam between the two first access points in our path. The same phenomenon can be seen a bit later.

At the end we noticed that the ssh connection was still alive even after switching interfaces multiple times. We can thus say from our experiment that the MPTCP port of ssh seems to be successful.

Apple Music on iOS13 uses Multipath TCP through load-balancers

Apple Music on iOS13 uses Multipath TCP through load-balancers

Since the publication of RFC6824 in 2013, interest in Multipath TCP has continued to grow and various use cases have been deployed. Apple uses Multipath TCP for its Siri voice recognition application since 2013 to support seamless handovers while walking. Tessares uses Multipath TCP to deploy Hybrid Access Networks that combine xDSL and LTE to provide faster Internet access services in rural areas. Samsung, LG and Huawei use Multipath TCP on their Android smartphones to combine Wi-Fi and 4G. Recently, 3GPPP has selected Multipath TCP to support Wi-Fi/5G co-existence in future 5G networks and a first prototype has been demonstrated.

Despite these growing deployments, web hosting companies and CDNs have complained that Multipath TCP was difficult to deploy because they assume that load balancers would need to be changed to terminate Multipath TCP connections.

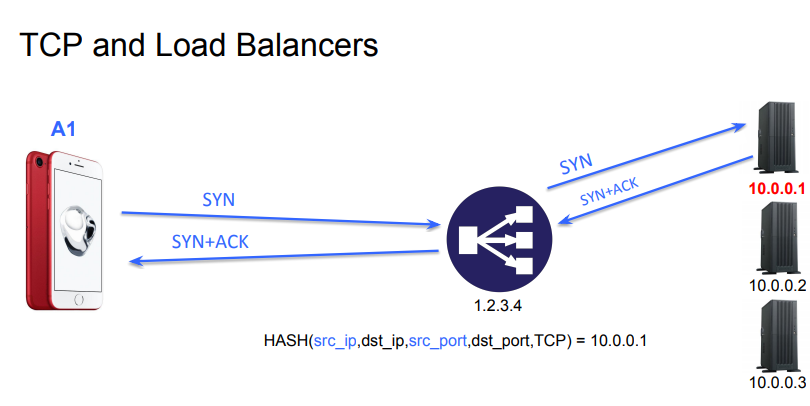

It turns out that it is possible to support Multipath TCP on servers with todays’ load-balancers. Fabien Duchêne proposed and evaluated this solution in the Making Multipath TCP Friendlier to Load-Balancers and Anycast paper that he presented at ICNP’17. A simpler load-balancer is illustrated in the figure below. The load-balancer uses IP address 1.2.3.4 and forwards connections to the servers behind it, selecting them e.g. based on a hash function.

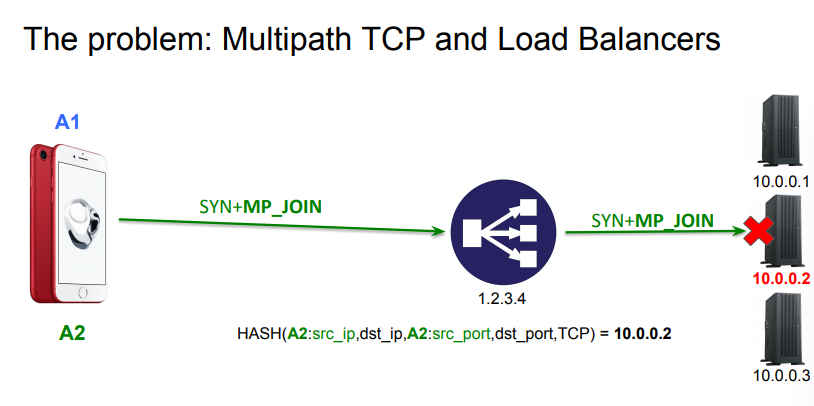

With Multipath TCP, this simple approach does not work anymore as the second subflow from the client could be hashed to a different server than the one of the initial subflow.

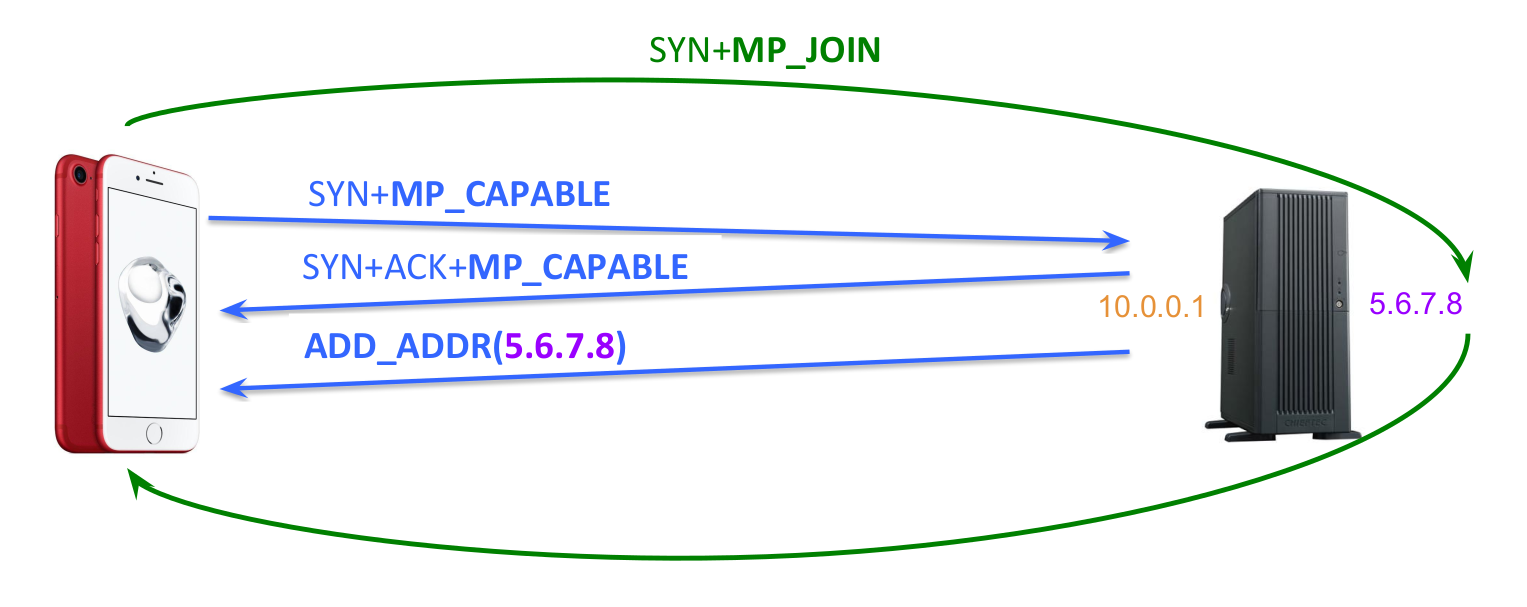

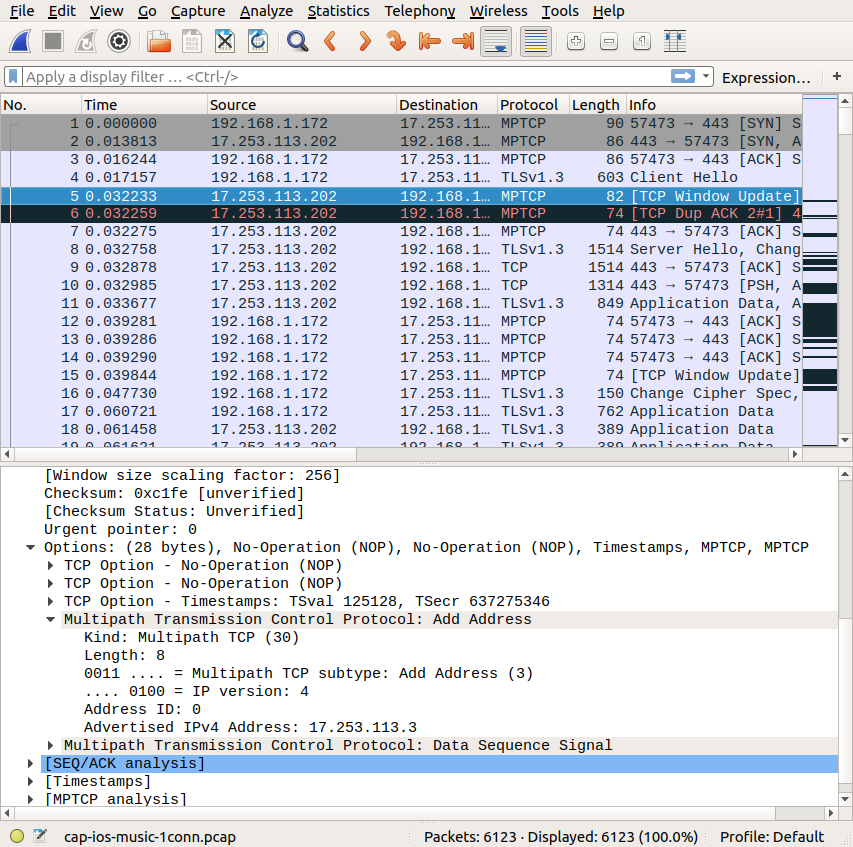

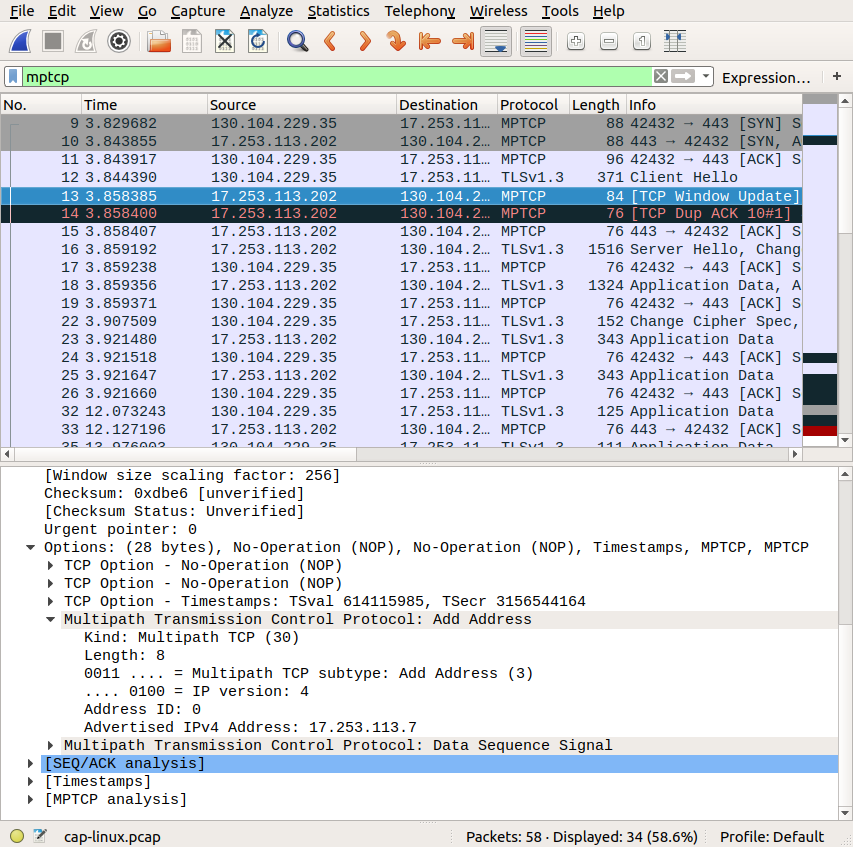

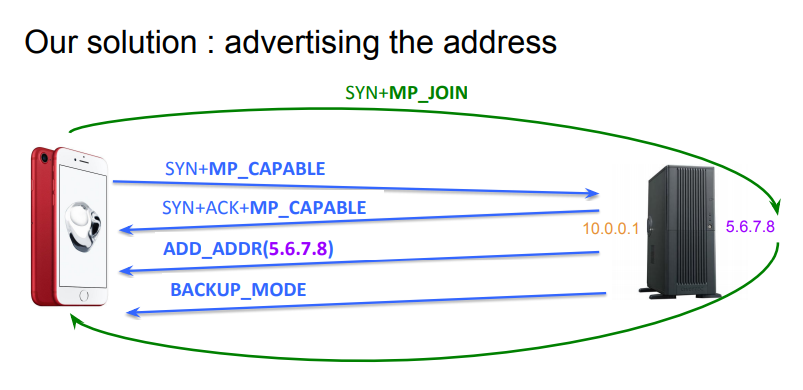

The solution proposed by Fabien Duchêne is both simple and efficient. The load-balancer has a public IP address that is advertised by the DNS. Furthermore, each server that resides behind this load balancer has it own public IP address. When a client contacts the load-balanced service, its SYN packet reaches the load-balancer that selects one of the servers in the pool. The server confirms the establishment of the connection using the load-balanced IP address. As soon as the Multipath TCP connection is established, the server sends a packet containing its own IP address inside an ADD_ADDR option. Thanks to this address, the client can establish the subsequent subflows directly towards the server address, effectively bypassing the load-balancer.

Given its benefits, this solution has been included in the standards-track version of Multipath TCP that is being finalised by the IETF. However, it had not yet been deployed at a large scale, until the release of iOS13 by Apple in September 2019. Given the benefits of Multipath TCP for Siri, Apple has decided to also use Multipath TCP for its Apple Maps and Apple Music applications. These two applications are often used while walking and thus suffered from interruptions during Wi-Fi/cellular handovers. A closer look at a packet trace collected from an iPhone using Apple Music shows interesting results.

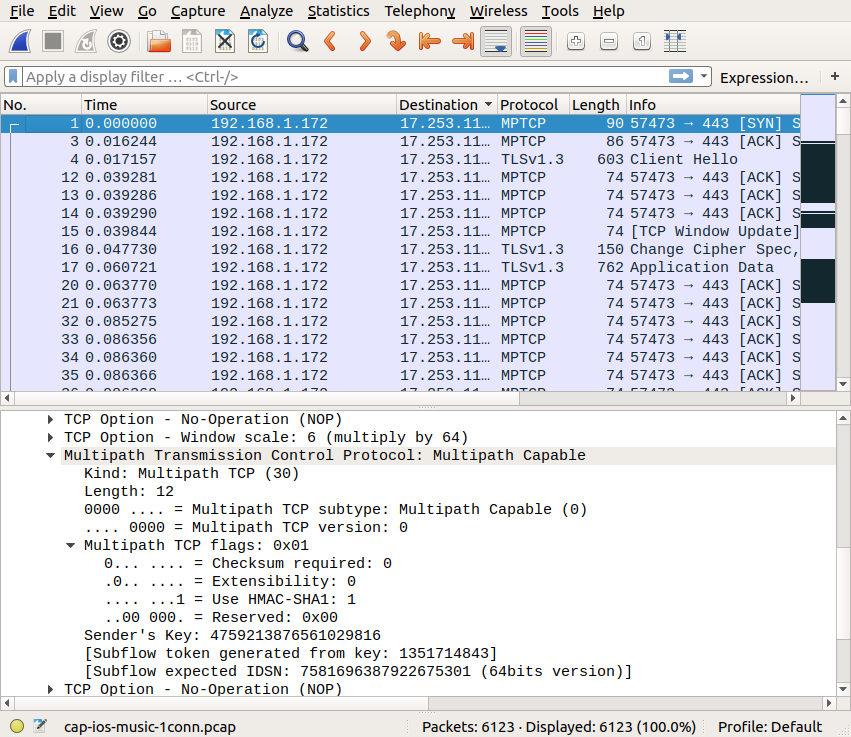

First, Apple Music uses Multipath TCP as shown by the options contained in the SYN packet. It is interesting to point out that both the iPhone and the server use the first version of Multipath TCP defined in RFC6824.

Once the connection has been established, the server sends quickly a packet that advertises its own address in the ADD_ADDR option.

A closer look at this option indicates that this address is advertised as having address identifier 0. According to RFC6824, this identifier is reserved for the address of the initial subflow. This advertisement thus changes the address of the initial subflow, and we can expect that iOS13 will use this new address to establish subsequent subflows on this Multipath TCP connection. We checked by contacting one of Apple Music servers from a Linux client to see how Linux reacts to such an option and it supports it correctly.

According to statista, there were more than 60 million subscribers of the Apple Music service in June 2019. As they upgrade their smartphones to support iOS13, we will observe a huge growth in Multipath TCP traffic during the coming weeks… If you see other servers or CDNs that enable Multipath TCP, let us know…

SOCKS and Multipath TCP

The main benefit of Multipath TCP is that it enables either the simultaneous utilisation of different paths or provides fast handovers from one path to another. Several deployments of Multipath TCP leverage these unique capabilities of multipath transport. When Apple opted for Multipath TCP in 2013, they could leverage its benefits by enabling it on both their iPhones and iPads and on the servers that support the Siri application. This is the end-to-end approach to deploying Multipath TCP.

However, there are many deployment scenarios where it would be beneficial to use Multipath TCP to interact with servers that have not yet been upgraded to support this new protocol. A first use case are the smartphones willing to combine Wi-Fi and cellular or quickly switch from one to another. Another use case is to combine different access networks to benefit from a higher bandwidth. In large cities, users can often use high bandwidth access links through VDSL, FFTH or cable networks. However, in rural areas, bandwidth is often limited and many home users or SMEs need to combine different access links to reach 10 Mbps or a small multiple of this low bandwidth. Multipath TCP has been used in these two deployments together with SOCKS RFC 1928.

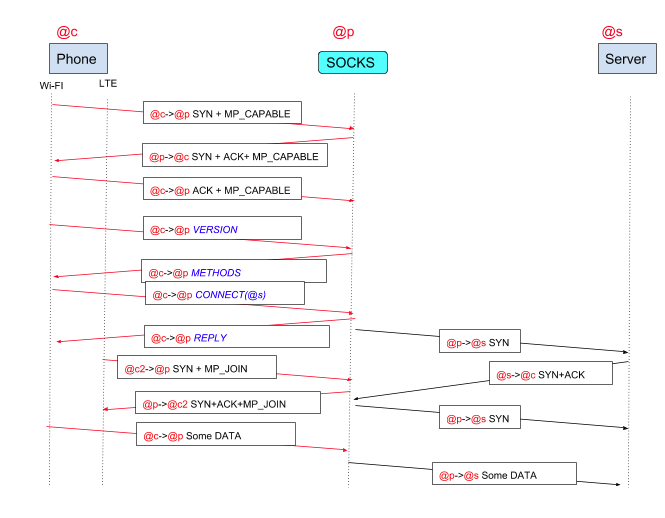

SOCKS is an old application layer protocol that was designed to allow users in a corporate network to access the Internet through a firewall that filters connections. Several implementations of SOCKS exist and a popular one is shadowsocks. In Soutch Korea, the smarpthones that use the Gigapath service interact through a SOCKS server over Multipath TCP as illustrated in the figure below.

This SOCKS service can be easily deployed once the Multipath TCP implementation has been ported on the smartphones. It appears as a simple application that interacts with the SOCKS server which is managed by the network operator. However, SOCKS is a chatty protocol that significantly increases the delay to establish a connection. To establish one TCP connection through the SOCKS proxy, the smartphone needs to exchange several packets as shown in the figure below.

First, the smartphone sends a SYN with the MP_CAPABLE option to create a connection to the SOCKS server. The SOCKS server replies and this consumes one round-trip-time. Then, the smartphone sends a SOCKS message to initiate the SOCKS sessions and find the methods that the SOCKS server supports. In some cases, an additional step is required to authenticate the client (not shown in the figure). Finally, the smartphone sends a CONNECT message to request the creation of a connection towards the final server. At this point, the smartphone can also create a subflow towards the SOCKS server. A benefit of some SOCKS deployment is that it can also encrypt all the data exchanged between the smartphone and the SOCKS server. This was important a few years ago, but less crucial today given the number of services that already use TLS.

A similar approach has been used to combine different access links. For example, OVH provides the overthebox that uses a SOCKS proxy running on an access router to combine several xDSL/cable/cellular links in a single pipe. The SOCKS proxy running on this router interacts with a SOCKS server as shown above. The code running on their access router is open-source and available from https://github.com/ovh/overthebox. OpenMPTCPRouter uses a similar approach.

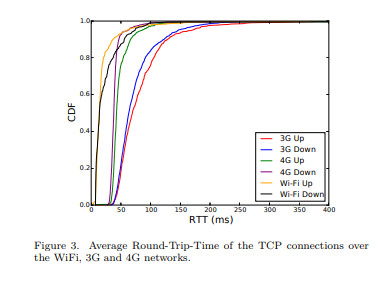

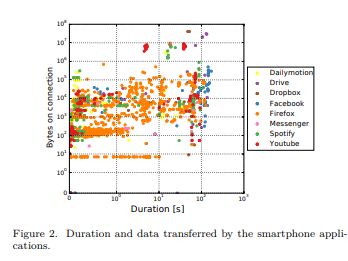

The main benefit of SOCKS in these deployment is that it enables the simultaneous utilization of different access links. This increases the throughput for long TCP connections as shown by measurements from speedtest and similar services. However, SOCKS has a major drawback: it increases the time required to establish all TCP connections by several round-trip-times between the client and the SOCKS server. This additional delay can be significant for applications that rely on short TCP connections. The figure below (source [CBHB16a]) shows that the round-trip-time on cellular networks can be significant.

Measurement carried on smartphones [CBHB16a] show that many applications use very short connections that exchange a small amount of data. Increasing the setup time of these connections by forcing them to be proxied by SOCKS may affect the user experience.

References

| [CBHB16a] | (1, 2) Quentin De Coninck, Matthieu Baerts, Benjamin Hesmans, and Olivier Bonaventure. A first analysis of multipath tcp on smartphones. In 17th International Passive and Active Measurements Conference, volume 17. Springer, March-April 2016. URL: https://inl.info.ucl.ac.be/publications/first-analysis-multipath-tcp-smartphones.html. |

Using load balancers in front of Multipath TCP servers

Load balancers play a very important role in today’s Internet. Most Internet services are provided by servers that reside behind one or several layers of load-balancers. Various load-balancers have been proposed and implemented. They can operate at layer 3, layer 4 or layer 7. Layer 4 is very popular and we focus on such load balancers in this blog post. A layer-4 load balancer uses information from the transport layer to load balance TCP connections over different servers. There are two main types of layer-4 load balancers :

- The stafeful load balancers

- The stateless load balancers

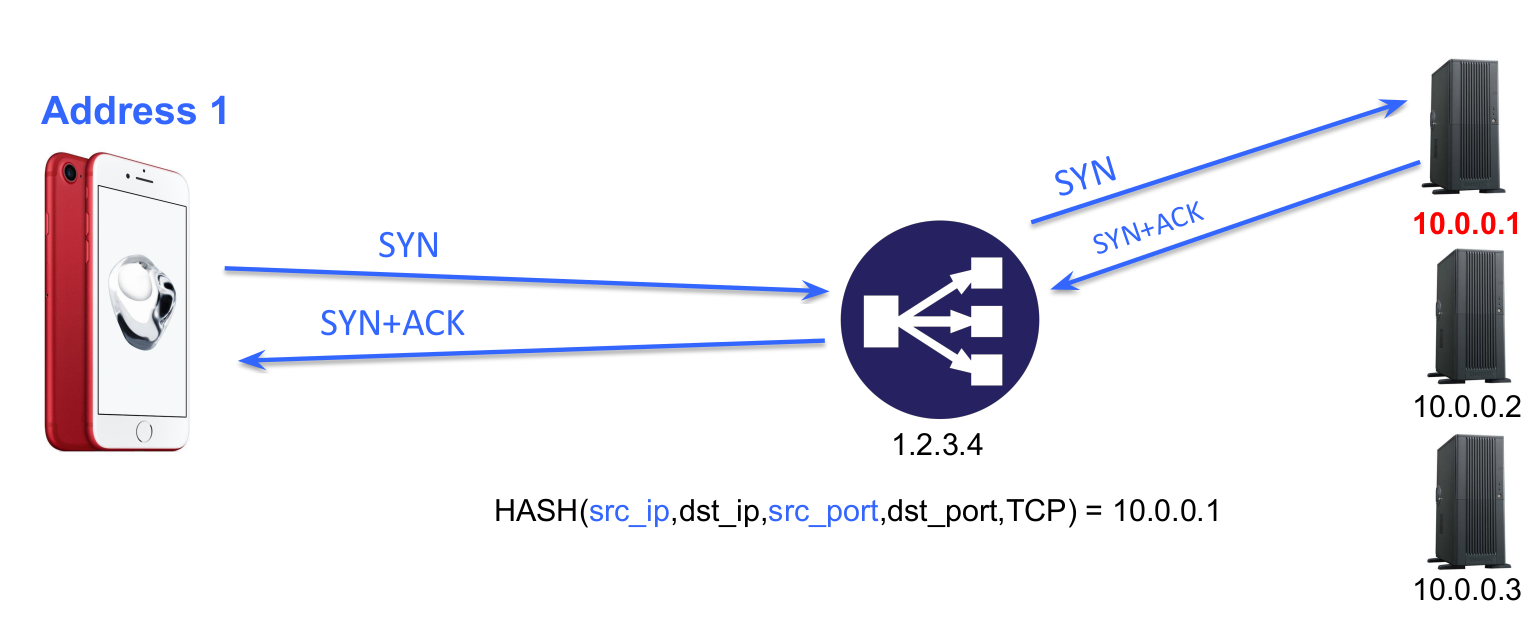

Schematically, a load balancer is a device or network function that processes incoming packets and forwards all packets that belong to the same connection to a specific server. A stateful load balancer will maintain a table that associates the five-tuple that identifies a TCP connection to a specific server. When a packet arrives, it seeks a matching entry in the table. If a match is found, the packet is forwarded to the selected server. If there is no match, e.g. the packet is a SYN, a server is chosen and the table is updated before forwarding the packet. The table entries are removed when they expire or when the associated connection is closed. A stateless load balancer does not maintain a table. Instead, it relies on hash function that is computed over each incoming packet. A simple approach is to use a CRC over the source and destination addresses and ports and associate each server to a range of CRC values. This is illustrated in the figure below (source: Fabien Duchene’s presentation of [DB17]).

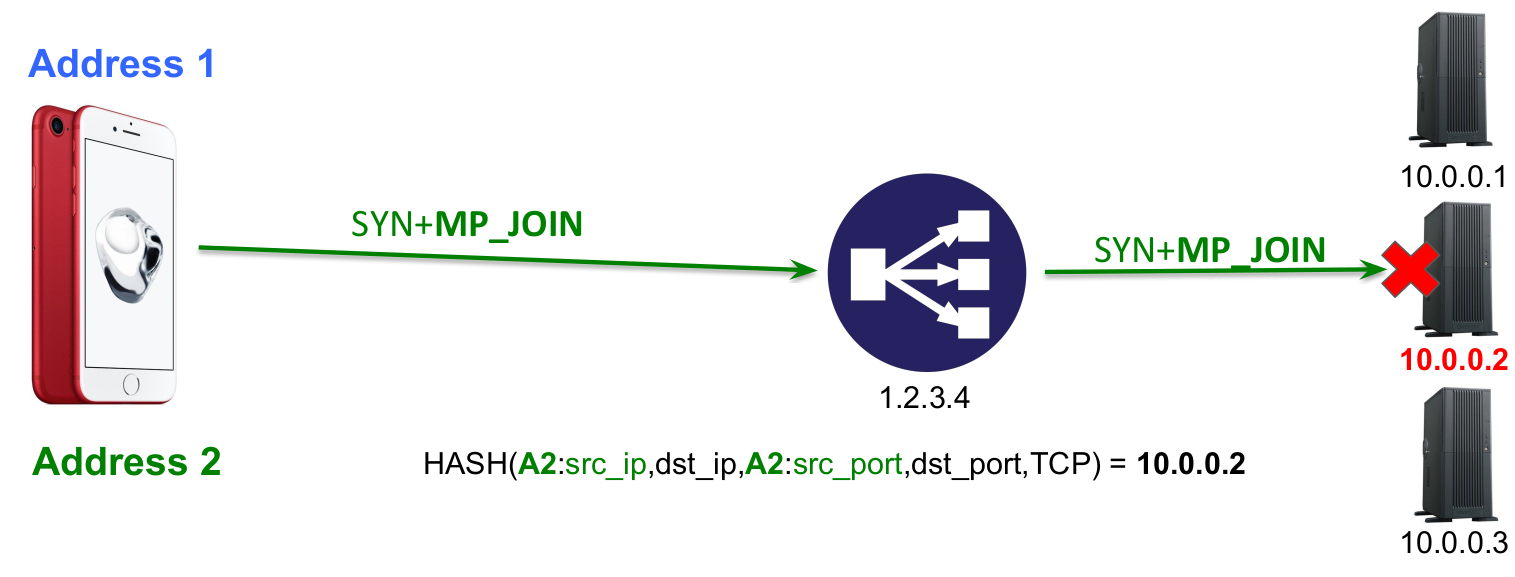

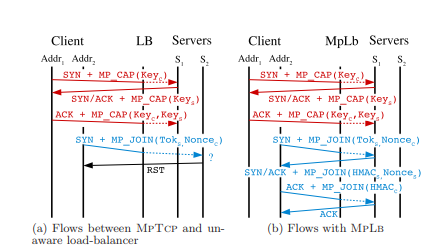

With Multipath TCP, a single connection can be composed of different subflows that have their own five tuples. This implies that that data corresponding to a given Multipath TCP connection can be received over several different TCP subflows that obviously need to be forwarded to the same server by the load balancer. This is illustrated in the figure below (source: Fabien Duchene’s presentation of [DB17]).

Commercial load balancers

Several approaches have been proposed in the literature to solve this problem. In Datacenter Scale Load Balancing for Multipath Transport [OR16], V. Olteanu and C. Raiciu proposed two different tricks to support stateless load balancers with Multipath TCP. First, the load balancer selects the key that will be used by the server for each incoming Multipath TCP connection. As this key is used to Token that identifies the Multipath connection in the MP_JOIN option, this enables the load balancer to control the Token that clients will send when creating subflows. This allows the load balancer to correctly associated MP_JOINs to the server that terminates the corresponding connection. This is not sufficient for a stateless load balancer. A stateless load balancer also needs to associate each incoming packet to a specific server. If this packet belongs to a subflow, it carries the source and destination addresses and ports, but those of a subflow have no relationship with the initial subflow. They solve this problem by encoding the identification of the server inside a part of the TCP timestamp option.

In Towards a Multipath TCP Aware Load Balancer [LienardyD16], S. Lienardy and B. Donnet propose a mix between stateless and stateful approaches. The packets from the first subflow are sent to a specific server by hashing their source and destination addresses and ports. They then extract the key exchanged in the third ack to store the token associated with this connection. This token is then placed in a map that is used to load balance the SYN MP_JOIN packets. The reception of an MP_JOIN packet forces the creation of an entry in a table that is used to map the packets from the additional subflows. This is illustrated in the figure below (source [LienardyD16])

A benefit of this approach is that since the second subflow is directly associated with the server, all the packets exchanged over this subflow can reach the server without passing through the load balancer.

In Making Multipath TCP friendlier to Load Balancers and Anycast, F. Duchene and O. Bonaventure leverage a feature of the forthcoming standard’s track version of Multipath TCP. In this revision, the MP_CAPABLE option has been modified compared to RFC6824. A first modification is that the client does not send its key anymore in the SYN packet. A second modification is the C that when when set by a server in the SYN+ACK, it indicates that the server will not accept additional MPTCP subflows to the source address and flows of the SYN. This bit was specifically introduced to support load balancers. It works as follows. When a client creates a connection, it sends a SYN towards the load balancer with the MP_CAPABLE option but no key. The load balancer selects one server to handle the connection, e.g. based on a stateless hash. Each server has a dedicated IP address or a dedicated port number. It replies to the SYN with a SYN+ACK that contains the MP_CAPABLE option with the C bit set. Once the connection is established, it sends an ADD_ADDR option with its direct IP address to the client. The client then uses the direct address to create the subflows and those can completely bypass the load balancer. The source code of the implementation is available from https://github.com/fduchene/ICNP2017

The latest Multipath TCP load balancer was proposed in Stateless Datacenter Load-balancing with Beamer by V. Olteanu et al. It assigns one port to each load balanced server and also forces the client to create the subflows towards this per-server port number. The load balancer is implemented in both software (click elements) and hardware (P4) and evaluated in details. The source code is available from https://github.com/Beamer-LB

Commercial load balancers such as F5 or Citrix also support Multipath TCP.

References

| [DB17] | (1, 2) Fabien Duchene and Olivier Bonaventure. Making multipath tcp friendlier to load balancers and anycast. In Network Protocols (ICNP), 2017 IEEE 25th International Conference on, 1–10. IEEE, 2017. URL: https://inl.info.ucl.ac.be/publications/making-multipath-tcp-friendlier-load-balancers-and-anycast.html. |

| [LienardyD16] | (1, 2) Simon Liénardy and Benoit Donnet. Towards a multipath tcp aware load balancer. In Proceedings of the 2016 Applied Networking Research Workshop, 13–15. ACM, 2016. |

| [OR16] | Vladimir Olteanu and Costin Raiciu. Datacenter scale load balancing for multipath transport. In Proceedings of the 2016 workshop on Hot topics in Middleboxes and Network Function Virtualization, 20–25. ACM, 2016. |