A first look at multipath-tcp.org : subflows

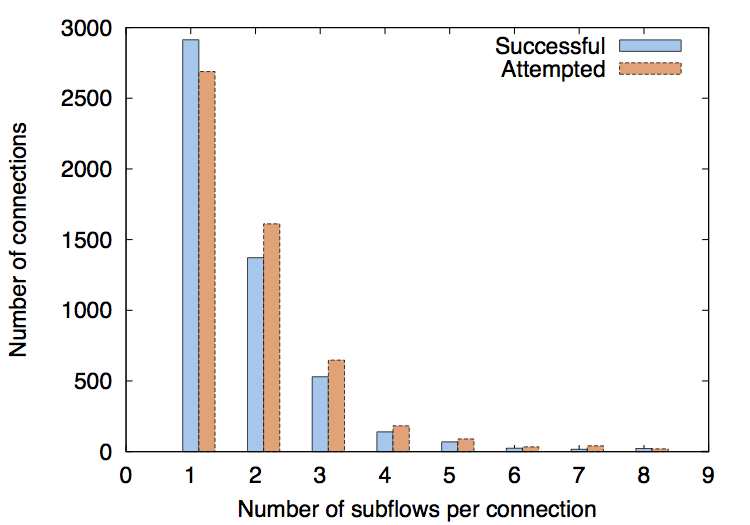

In theory, a Multipath TCP connection can gather an unlimited number of subflows. In practice, implementations limit the number of concurrent subflows. The Linux implementation used on the monitored server can support up to 32 different subflows. We analyse here the number of subflows that are established for each Multipath TCP connection. Since our server never establishes subflows, this number is an indication of the capabilities of the clients that interact with it.

The figure below provides the distribution of the number of subflows per Multipath TCP connection. We show the distribution for the number of successfully established subflows, i.e., subflows that complete the handshake, as well as for all attempted ones. As can be seen, several connection attempts either fail completely or establish fewer subflows than intended. In total, we observe 5098 successful connections with 8701 subflows. The majority of the observed connections (57%) only establish one subflow. Around 27% of them use two subflows. Only 10 connections use more than 8 subflows; they are omitted from the figure.
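As a rough illustration of how such a distribution can be computed, the sketch below takes one subflow count per connection and returns the fraction of connections for each count. The function name `subflow_distribution` and the toy input are assumptions made for this example; only the totals 5098 and 8701 come from the trace.

```python
from collections import Counter


def subflow_distribution(counts):
    """Return {number of subflows: fraction of connections}."""
    total = len(counts)
    histogram = Counter(counts)
    return {k: histogram[k] / total for k in sorted(histogram)}


# Toy input, NOT taken from the trace: four connections.
toy = subflow_distribution([1, 1, 2, 3])
print(toy)

# Totals reported in the text: 8701 established subflows over 5098 connections.
mean_subflows = 8701 / 5098
print(f"{mean_subflows:.2f} subflows per connection on average")
```

With the reported totals, the average is about 1.7 subflows per connection, which is consistent with most connections using one or two subflows.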

A first look at multipath-tcp.org : ADD_ADDR usage

The first question that we asked ourselves about the usage of Multipath TCP was whether the communicating hosts were using multiple addresses.

Since the packet trace was collected on the server that hosts the Multipath TCP implementation in the Linux kernel, we can expect that many Linux enthusiasts use it to download new versions of the code, visit the documentation, perform tests or verify that their configuration is correct. These users might run different versions of Multipath TCP in the Linux kernel or on other operating systems. Unfortunately, as of this writing, there is not enough experience with the Multipath TCP implementations to detect which operating system was used to generate specific Multipath TCP packets.

Thanks to the ADD_ADDR option, it is however possible to collect interesting data about the characteristics of the clients that contact our server. Over the 5098 observed Multipath TCP connections, 3321 announced at least one address. Surprisingly, only 21% of the collected IPv4 addresses in the ADD_ADDR option were globally routable addresses.

The remaining 79% of the IPv4 addresses found in the ADD_ADDR option were private addresses and in some cases link-local addresses. This confirms that Multipath TCP’s ability to pass through NATs is an important feature of the protocol RFC 6824.

The IPv6 addresses collected in the ADD_ADDR option had more diversity. 72% of them were globally routable IPv6 addresses. The other types of addresses that we observed are shown in the table below. The IPv4-compatible and the 6to4 IPv6 addresses were expected, but the link-local and documentation addresses should have been filtered by the client and not announced over Multipath TCP connections. The Multipath TCP specification RFC 6824 should be updated to specify which types of IPv4 and IPv6 addresses can be advertised over a Multipath TCP connection.

| Address type | Count |
|---|---|
| Link-local (IPv4) | 51 |
| Link-local (IPv6) | 241 |
| Documentation only (IPv6) | 21 |
| IPv4-compatible IPv6 | 13 |
| 6to4 | 206 |
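The classification used above can be approximated with Python's standard `ipaddress` module. This is only a sketch of one possible approach, not the scripts used for the analysis: the function name `classify` and the category labels are our own, and the prefixes are the well-known ones (2001:db8::/32 for documentation, 2002::/16 for 6to4, ::/96 for IPv4-compatible addresses).

```python
import ipaddress

# Well-known IPv6 prefixes; the variable names are chosen for this example.
DOCUMENTATION_V6 = ipaddress.ip_network("2001:db8::/32")
SIXTOFOUR = ipaddress.ip_network("2002::/16")
IPV4_COMPATIBLE = ipaddress.ip_network("::/96")


def classify(addr_text):
    """Return a coarse category for an address announced in ADD_ADDR."""
    addr = ipaddress.ip_address(addr_text)
    if addr.is_link_local:
        return "link-local"
    if addr.version == 6:
        if addr in DOCUMENTATION_V6:
            return "documentation"
        if addr in SIXTOFOUR:
            return "6to4"
        # ::/96 also contains :: and ::1, which are not IPv4-compatible addresses.
        if (addr in IPV4_COMPATIBLE
                and not addr.is_unspecified
                and not addr.is_loopback):
            return "ipv4-compatible"
    if addr.is_private:
        return "private"
    if addr.is_global:
        return "global"
    return "other"


for sample in ["10.0.0.1", "169.254.10.1", "fe80::1", "2001:db8::1", "8.8.8.8"]:
    print(sample, classify(sample))
```

The special prefixes are tested before `is_private` because the standard library reports some of them, such as the documentation prefix, as private.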

A first look at Multipath TCP traffic

The Multipath TCP website is a unique vantage point to observe Multipath TCP traffic on the global Internet. We have recently collected a one-week long packet trace from this server. It has been collected using tcpdump and contains the headers of all TCP packets received and sent by the server hosting the Multipath TCP Linux kernel implementation. Apart from a web server, the machine also hosts an FTP server and an Iperf server. The machine has one physical network interface with two IP addresses (IPv4 and IPv6) and runs the stable version 0.89 of the Multipath TCP implementation in the Linux kernel.

To analyse the Multipath TCP connections in the dataset, we have extended the mptcptrace software. mptcptrace handles all the main features of the Multipath TCP protocol and can extract various statistics from a packet trace. Where necessary, we have combined it with tcptrace and/or its output has been further processed by custom scripts.
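To give an idea of what such a tool has to do with captured headers, here is a minimal sketch that walks the options of a TCP header and reports the Multipath TCP ones. It is not how mptcptrace is implemented; the function name `mptcp_subtypes` is hypothetical. It relies only on the option kind (30) and the subtype values defined in RFC 6824.

```python
MPTCP_KIND = 30  # TCP option kind assigned to Multipath TCP

# Subtype values from RFC 6824 (upper 4 bits of the byte after the length).
SUBTYPES = {
    0: "MP_CAPABLE", 1: "MP_JOIN", 2: "DSS", 3: "ADD_ADDR",
    4: "REMOVE_ADDR", 5: "MP_PRIO", 6: "MP_FAIL", 7: "MP_FASTCLOSE",
}


def mptcp_subtypes(options):
    """Return the names of the Multipath TCP options found in raw TCP option bytes."""
    found = []
    i = 0
    while i < len(options):
        kind = options[i]
        if kind == 0:          # End of Option List
            break
        if kind == 1:          # No-Operation, a single byte
            i += 1
            continue
        if i + 1 >= len(options):
            break              # truncated option
        length = options[i + 1]
        if length < 2 or i + length > len(options):
            break              # malformed or truncated option
        if kind == MPTCP_KIND and length >= 3:
            subtype = options[i + 2] >> 4
            found.append(SUBTYPES.get(subtype, f"unknown({subtype})"))
        i += length
    return found


# Two NOPs followed by an ADD_ADDR option announcing 192.0.2.1 with address ID 1.
sample = bytes([1, 1, 30, 8, 0x34, 1, 192, 0, 2, 1])
print(mptcp_subtypes(sample))
```

A real analysis tool also has to parse the IP and TCP headers and track the state of each connection; this sketch only covers the option walk.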

The table below summarizes the general characteristics of the dataset. In total, the server received around 136 million TCP packets with 134 GiBytes of data (including the TCP and IP headers) during the measurement period. As shown in the table (in the block Multipath TCP), a significant part of the TCP traffic was related to Multipath TCP. Unsurprisingly, IPv4 remains more popular than IPv6, but it is interesting to note that the fraction of IPv6 traffic from the hosts that are using Multipath TCP (9.8%) is bigger than from the hosts using regular TCP (3.7%). This confirms that dual-stack hosts are an important use case for Multipath TCP.

We have also studied the application protocols used in the Multipath TCP traffic. Around 22.7% of the packets were sent or received on port 80 (HTTP) of the server. A similar percentage of packets (21.2%) was sent to port 5001 (Iperf) by users conducting performance measurements. The FTP server was responsible for the majority of packets. It hosts the Debian and Ubuntu packages for the Multipath TCP kernel and is thus often used by Multipath TCP users.

In terms of connections, HTTP was responsible for 89.7% of them, Iperf for 6.4% and FTP control connections for 1.9%. The remaining 2.0% of the connections used higher ports and are probably FTP data connections.

| All TCP | Total | IPv4 | IPv6 |
|---|---|---|---|
| # of packets [Mpkt] | 136.1 | 128.5 | 7.6 |
| # of bytes [GiByte] | 134.0 | 129.0 | 5.0 |

| Multipath TCP | Total | IPv4 | IPv6 |
|---|---|---|---|
| # of packets [Mpkt] | 29.4 | 25.0 | 4.4 |
| # of bytes [GiByte] | 20.5 | 18.5 | 2.0 |
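The percentages quoted in the text can be cross-checked against the rounded table values. The snippet below is only a sketch: the dictionary layout and the `percent` helper are our own naming, and since the inputs are rounded the results are approximate. Computed this way, 9.8% is the IPv6 share of the Multipath TCP bytes, and 3.7% matches the IPv6 share of the bytes in the All TCP row.

```python
# Values copied from the two tables above (packets in Mpkt, bytes in GiByte).
ALL_TCP = {
    "packets": {"total": 136.1, "ipv4": 128.5, "ipv6": 7.6},
    "bytes": {"total": 134.0, "ipv4": 129.0, "ipv6": 5.0},
}
MPTCP = {
    "packets": {"total": 29.4, "ipv4": 25.0, "ipv6": 4.4},
    "bytes": {"total": 20.5, "ipv4": 18.5, "ipv6": 2.0},
}


def percent(part, whole):
    """Return part/whole as a percentage rounded to one decimal."""
    return round(100.0 * part / whole, 1)


# Share of all TCP packets that belong to Multipath TCP connections.
mptcp_packet_share = percent(MPTCP["packets"]["total"], ALL_TCP["packets"]["total"])

# IPv6 share of the bytes, for Multipath TCP and for all TCP traffic.
mptcp_ipv6_byte_share = percent(MPTCP["bytes"]["ipv6"], MPTCP["bytes"]["total"])
all_ipv6_byte_share = percent(ALL_TCP["bytes"]["ipv6"], ALL_TCP["bytes"]["total"])

print(mptcp_packet_share, mptcp_ipv6_byte_share, all_ipv6_byte_share)
```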

In subsequent posts, we will explore the packet trace and provide additional information about what we have learned about Multipath TCP when analysing it.

FlowBender : revisiting Equal Cost Multipath in Datacenters

Equal Cost Multipath (ECMP) is a widely used technique that allows routers and switches to spread the packets over several paths having the same cost. When a router/switch has several paths having the same cost towards a given destination, it can send packets over any of these paths. To maximise load-balancing, routers install all the available paths in their forwarding tables and balance the arriving packets over all of them. To ensure that all the packets that correspond to the same layer-4 flow follow the same path and thus have roughly the same delay, routers usually select the outgoing equal cost path by computing $H(IP_{src}, IP_{dst}, Port_{src}, Port_{dst}, Proto) \bmod n$, where $n$ is the number of equal cost paths towards the packet’s destination and $H$ a hash function. This technique works well in practice and is used in both datacenters and ISP networks.

A consequence of this utilisation of ECMP is that TCP connections with different source ports between two hosts will sometimes follow different paths. In large ISP networks, this may lead to very different round-trip-times for different flows between a pair of hosts. In datacenters, it has been shown that Multipath TCP can better exploit the available network resources by load balancing TCP traffic over all equal cost paths. The ndiffports path manager was designed with this use case in mind.
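The hash-based path selection described above, and the effect of varying only the source port, can be sketched as follows. This is an illustration under assumptions: the function name `ecmp_path` is ours, and SHA-256 merely stands in for whatever (usually much simpler) hash function a given router or switch implements.

```python
import hashlib


def ecmp_path(src_ip, dst_ip, src_port, dst_port, proto, n_paths):
    """Pick one of n_paths equal cost paths from the flow's five-tuple."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{proto}".encode()
    digest = hashlib.sha256(key).digest()  # stand-in for the router's hash H
    return int.from_bytes(digest[:4], "big") % n_paths


# All packets of one flow carry the same five-tuple, hence the same path.
first = ecmp_path("192.0.2.1", "198.51.100.7", 40000, 80, 6, 4)
again = ecmp_path("192.0.2.1", "198.51.100.7", 40000, 80, 6, 4)

# Connections that differ only by their source port may use different paths.
paths = {
    ecmp_path("192.0.2.1", "198.51.100.7", port, 80, 6, 4)
    for port in range(40000, 40064)
}
print(first == again, sorted(paths))
```

This is the property that a path manager such as ndiffports relies on: subflows created between the same pair of addresses with different source ports are likely to be hashed onto different equal cost paths.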

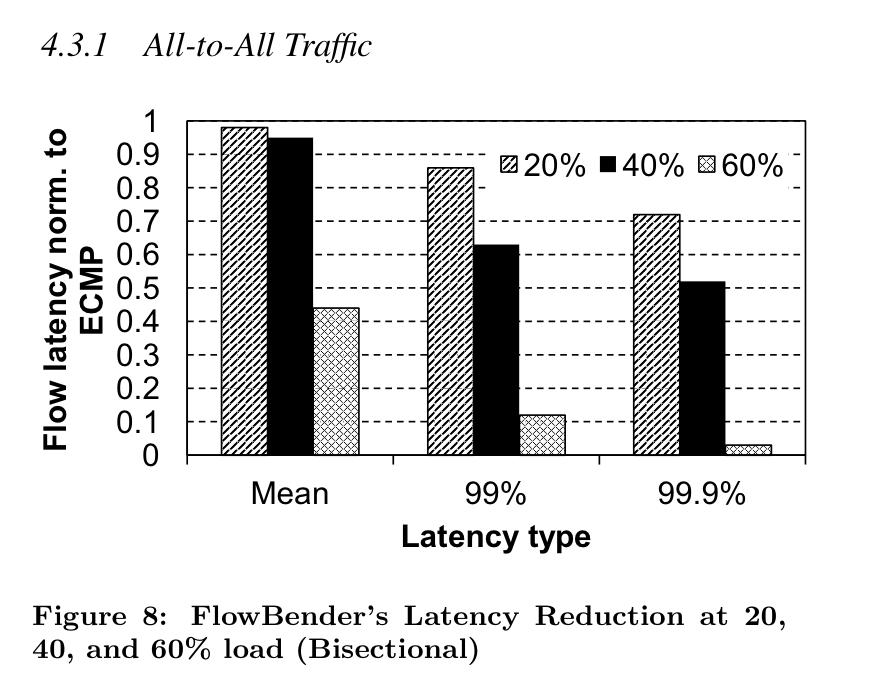

In a recent paper presented at Conext 2014, researchers from Google, Purdue University and Fabien Duchene propose another approach to allow TCP to efficiently utilise all paths inside a datacenter. Instead of using Multipath TCP to spread the packets from each connection over several paths (and risk increased delays due to reordering at the destination), they change the hash function used by the routers/switches. For this, they build upon the Smart hashing algorithm found in some Broadcom switches. In a homogeneous datacenter that uses a single type of switch, they select the outgoing path as $H(TTL, IP_{src}, IP_{dst}, Port_{src}, Port_{dst}, Proto) \bmod n$, where TTL is the Time-to-Live extracted from the packet. This is not the first flow-based load-balancing strategy that uses the TTL. Another example is CFLB, which even allows controlling the path followed by the packets. In addition to using the TTL for load-balancing, the datacenter switches that they use support Explicit Congestion Notification and set the CE bit when their buffers grow. They then modify the TCP sources to react to congestion events. When a source receives a TCP acknowledgement that indicates congestion, it simply reacts by changing the TTL of all the packets sent over this connection. As illustrated in the figure below, this improves the flow termination time under higher loads.
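The mechanism described above can be summarised with a small sketch. It is not the FlowBender implementation: the class `TtlBendingSender`, the set of TTL values it cycles through and the use of SHA-256 as the switch hash are all assumptions made for illustration. It only captures the two ideas from the text, namely that the TTL is part of the hash input and that the sender changes the TTL when an acknowledgement signals congestion.

```python
import hashlib


def select_path(ttl, src_ip, dst_ip, src_port, dst_port, proto, n_paths):
    """Switch side: the TTL is hashed together with the five-tuple."""
    key = f"{ttl}|{src_ip}|{dst_ip}|{src_port}|{dst_port}|{proto}".encode()
    digest = hashlib.sha256(key).digest()  # stand-in for the switch hash H
    return int.from_bytes(digest[:4], "big") % n_paths


class TtlBendingSender:
    """Sender side (hypothetical): pick another TTL when congestion is signalled."""

    def __init__(self, base_ttl=64, n_values=4):
        self.base_ttl = base_ttl
        self.n_values = n_values  # number of distinct TTL values to cycle through
        self.offset = 0

    @property
    def ttl(self):
        return self.base_ttl - self.offset

    def on_ack(self, congestion_signalled):
        # An ACK that echoes congestion triggers a TTL change, so the switches
        # may hash the following packets of this connection onto another path.
        if congestion_signalled:
            self.offset = (self.offset + 1) % self.n_values


sender = TtlBendingSender()
flow = ("192.0.2.1", "198.51.100.7", 40000, 80, 6)
before = select_path(sender.ttl, *flow, n_paths=8)
sender.on_ack(congestion_signalled=True)
after = select_path(sender.ttl, *flow, n_paths=8)
print(sender.ttl, before, after)
```

A new TTL does not guarantee a new path, since two TTL values can hash to the same output; it only gives the connection another chance to avoid the congested link.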

In homogeneous datacenters, the FlowBender approach is probably a viable solution. However, Multipath TCP continues to have benefits in public datacenters where the endhosts cannot influence the operation of the routers and switches.