mptcptrace demo

In this post we will show a small demonstration of mptcptrace usage. mptcptrace is available from http://bitbucket.org/bhesmans/mptcptrace.

The traces used for these examples are available from /data/blogPostPCAP.tar.gz. This is the first post in a series of five.

Context

We consider the following use case:

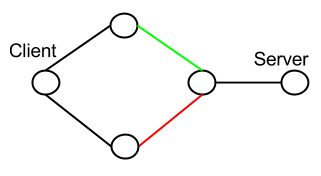

We have the client on the left that has two interfaces and two links to the server.

The green and red links each have well defined delay, bandwidth and loss rate. These links are respectively only used by the first interface of the client and the second interface of the client.

For our experiments, the client pushes a given amount of data at a given rate (at the application level) to the server.

For all the experiments, the client sends 15000 kB of data at 400 kB/s. Each experiment should therefore last at least 15000/400 = 37.5 s.
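The lower bound on the duration follows directly from the transfer size and the application sending rate. A minimal check of that arithmetic (the function name is ours, for illustration only):

```python
def min_duration_s(total_kb: float, rate_kb_per_s: float) -> float:
    """Shortest possible transfer time when the application is rate limited."""
    return total_kb / rate_kb_per_s


# Values used in all the experiments of this post.
duration = min_duration_s(15000, 400)
print(f"minimum duration: {duration} s")
```

Any loss or queueing in the network can only make the real transfer longer than this bound.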

We use the fullmesh path manager for all the experiments. We also set the congestion control scheme to lia for all the experiments.

The rmem for all the experiments is set to 10240 87380 16777216.

All these topologies are emulated within Mininet.

We analyze the client trace with mptcptrace with the following command:

mptcptrace -f client.pcap -s -G 50 -F 3 -a

but xplot files are not always easy to convert to png files, so we use:

mptcptrace -f client.pcap -s -G 50 -F 3 -a -w 2

to output csv files instead of xpl files and we parse them with R scripts.
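When many traces have to be processed, the two invocations above can be built from a small helper. This is only a sketch: the helper name is hypothetical, the flags are exactly those shown above, and it only prints the command line (passing the list to `subprocess.run` would execute it, assuming mptcptrace is installed).

```python
import shlex


def mptcptrace_argv(pcap: str, csv: bool = False) -> list:
    """Build the mptcptrace command line used in this post."""
    argv = ["mptcptrace", "-f", pcap, "-s", "-G", "50", "-F", "3", "-a"]
    if csv:
        # "-w 2" switches the output from xpl files to csv files.
        argv += ["-w", "2"]
    return argv


command = shlex.join(mptcptrace_argv("client.pcap", csv=True))
print(command)
```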

First experiment

| Green: | |
|---|---|
| Red: | |
| Client: | |

We collect the trace of the connection and generate the following graphs.

To get a first general idea, we can take a look at the sequence/acknowledgments graph.

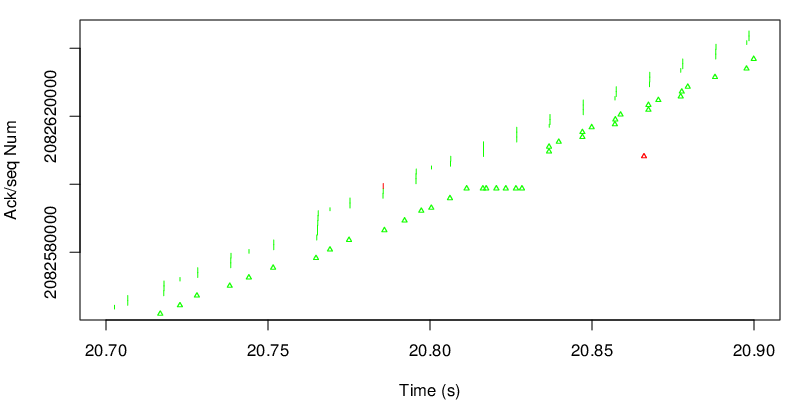

The graph is composed of a vertical line for each segment sent and a small triangle for each ack. The bottom of a vertical line is the start of the MPTCP map and the top is the end of the MPTCP map. Since there are many segments and acks, the graph looks like a simple line, but the zoom below shows the individual segments and acks. The color depends on the path. Here most of the data goes through the green path because the default MPTCP scheduler always chooses the path with the smallest RTT as long as there is enough space in its congestion window. Because the green path alone can sustain the sending rate of the application, MPTCP does not need to use the red path. However, we can see a small difference at around 20 seconds. Let’s zoom in on this part.
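The scheduling rule described above (lowest RTT first, as long as the congestion window has room) can be sketched as follows. This is a simplified model for illustration, not the kernel code; the class, its fields and the numeric values are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Subflow:
    name: str
    srtt_ms: float   # smoothed RTT estimate of the subflow
    cwnd: int        # congestion window, in segments
    in_flight: int   # segments sent but not yet acknowledged


def pick_subflow(subflows):
    """Return the lowest-RTT subflow that still has congestion window space."""
    available = [s for s in subflows if s.in_flight < s.cwnd]
    return min(available, key=lambda s: s.srtt_ms, default=None)


green = Subflow("green", srtt_ms=20.0, cwnd=10, in_flight=3)
red = Subflow("red", srtt_ms=100.0, cwnd=10, in_flight=0)
first_choice = pick_subflow([green, red]).name

# Once the green window is full, the scheduler has to use the red path.
green.in_flight = green.cwnd
second_choice = pick_subflow([green, red]).name
print(first_choice, second_choice)
```

In this model the red path is only selected when the green congestion window is full, for example after a loss has reduced it.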

On this zoom we can see that MPTCP decides to send one segment on the red path. This may be due to a loss on the green subflow. Because the red path has a higher delay, segments sent on the green subflow after the red segment will arrive before it at the server. In other words, green data will arrive out of sequence at the receiver. We see a series of duplicate MPTCP green acks before a jump in the acks. We can also see that the red ack covers data that has not been sent on the red subflow. This is an indication that some green segments arrived before the red segment. We can also observe that the red ack arrives late and acknowledges data that is already acked at the MPTCP layer. This is due to the delay on the way back for the red ack. Nevertheless, this ack still carries a useful ack for the TCP layer.
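The pattern of duplicate acks followed by a jump can be reproduced with a toy model. The one-way delays, send times and data sequence numbers below are hypothetical, and each segment is assumed to carry a single unit of data; only the ordering effect matters.

```python
def data_acks(segments, delay_ms):
    """segments: list of (dsn, path, send_time_ms).
    Returns, in arrival order, (path, cumulative data ack) emitted by the receiver."""
    arrivals = sorted((t + delay_ms[path], dsn, path) for dsn, path, t in segments)
    received, next_expected, acks = set(), 0, []
    for _, dsn, path in arrivals:
        received.add(dsn)
        while next_expected in received:
            next_expected += 1
        acks.append((path, next_expected))
    return acks


delay_ms = {"green": 10, "red": 100}   # hypothetical one-way delays
segments = [(0, "green", 0), (1, "red", 1), (2, "green", 2),
            (3, "green", 3), (4, "green", 4)]
acks = data_acks(segments, delay_ms)
for path, ack in acks:
    print(path, ack)
```

The green segments sent after the red one arrive first and trigger acks that all carry the same data ack. The late red segment fills the hole, so its ack jumps past data that was never sent on the red subflow.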

On the next figure, we take a look at the evolution of the goodput.

The cyan line shows the average goodput since the beginning of the connection, while the blue line shows the average goodput over the last 50 acks (the -G 50 parameter of the mptcptrace command). The blue line represents a more instantaneous value of the goodput. We could get an even more instantaneous view by reducing the value of the G parameter.
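The difference between the two curves can be illustrated with a short sketch. It assumes a list of (time, cumulative acked bytes) samples with strictly increasing times; the exact computation performed by mptcptrace may differ, and the sample values are made up.

```python
from collections import deque


def goodput_series(acks, window=50):
    """acks: list of (time_s, cumulative_acked_bytes).
    Returns (time, average since start, average over the last `window` acks)."""
    t0, b0 = acks[0]
    recent = deque([acks[0]], maxlen=window + 1)
    series = []
    for t, b in acks[1:]:
        recent.append((t, b))
        overall = (b - b0) / (t - t0)
        tw, bw = recent[0]              # oldest sample still inside the window
        windowed = (b - bw) / (t - tw)
        series.append((t, overall, windowed))
    return series


samples = [(0.0, 0), (1.0, 100), (2.0, 200), (3.0, 600)]
series = goodput_series(samples, window=1)
for point in series:
    print(point)
```

A smaller window reacts faster to the burst in the last sample, which is why reducing the G parameter gives a more instantaneous curve.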

Multipath TCP through a strange middlebox

Users of the Multipath TCP implementation in the Linux kernel perform experiments in various networks that the developers do not have access to. One of these users complained that Multipath TCP was not working in a satellite environment. Such networks often contain Performance Enhancing Proxies (PEP) that “tune” TCP connections to improve their performance. Often, those PEPs terminate TCP connections and the MPTCP options sent by the client never reach the server. This was not the case in this network, and the user complained that Multipath TCP did not advertise the addresses of the server. Fortunately, he managed to capture a packet trace on both the client and the server. An analysis of this packet trace gives interesting insights into the impact of such PEPs on TCP extensions.

The network topology is very simple. The client has two private interfaces (client1 and client2), both behind NATs and the server has two public IP addresses. In the trace below we replace the private IP addresses of the client by client1 and client2. Its public IP address is replaced by client and the two server addresses are server1 and server2.

The client opens a TCP connection towards the server.

09:27:12.316613 IP (tos 0x0, ttl 64, id 15494, offset 0, flags [DF], proto TCP (6), length 72)

client1.47862 > server1.49803: Flags [S], cksum,

seq 3452765235, win 28440, options [mss 1422,sackOK,TS val 55654581 ecr 0,

nop,wscale 8,mptcp capable {0x69ccde41dca19b8f}], length 0

09:27:13.318852 IP (tos 0x0, ttl 64, id 15495, offset 0, flags [DF], proto TCP (6), length 72)

client1.47862 > server1.49803: Flags [S], cksum,

seq 3452765235, win 28440, options [mss 1422,sackOK,TS val 55655584 ecr 0,

nop,wscale 8,mptcp capable {0x69ccde41dca19b8f}], length 0

This is a normal TCP SYN segment with the MSS, SACK, timestamp and MP_CAPABLE options. The second packet does not seem to reach the server. The first is translated by the NAT and received as follows by the server.

09:27:22.729048 IP (tos 0x0, ttl 47, id 15494, offset 0, flags [DF], proto TCP (6), length 72)

client.47862 > server1.49803: Flags [S], cksum,

seq 3452765235, win 384, options [mss 1285,sackOK,TS val 55654581 ecr 0,

nop,wscale 8,mptcp capable {0x69ccde41dca19b8f}], length 0

There are several interesting points to observe when comparing the two packets. First, the MSS option is modified. This is not unusual but indicates a middlebox on the path. Note that the window is severely reduced (384 instead of 28440). The server replies with a SYN+ACK.

09:27:22.729220 IP (tos 0x0, ttl 64, id 0, offset 0, flags [DF], proto TCP (6), length 72)

server1.49803 > client.47862: Flags [S.], cksum,

seq 3437506945, ack 3452765236, win 28560, options [mss 1460,sackOK,

TS val 155835098 ecr 55654581,nop,wscale 8,

mptcp capable {0x32205e67a94ad606}], length 0

This segment is also modified by the middlebox. It updates the MSS and the window, but does not change the timestamp chosen by the server.

09:27:14.188324 IP (tos 0x0, ttl 51, id 0, offset 0, flags [DF], proto TCP (6), length 72)

server1.49803 > client1.47862: Flags [S.], cksum,

seq 3437506945, ack 3452765236, win 384, options [mss 1285,sackOK,

TS val 155835098 ecr 55654581,nop,wscale 8,

mptcp capable {0x32205e67a94ad606}], length 0
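Comparing the sent and received versions of a segment field by field makes the middlebox modifications explicit. The sketch below copies the values from the SYN and SYN+ACK traces above into dictionaries; the field names and the helper are ours.

```python
def changed_fields(sent, received):
    """Return {field: (sent value, received value)} for every field that differs."""
    return {k: (v, received.get(k)) for k, v in sent.items() if received.get(k) != v}


syn_sent = {"ttl": 64, "win": 28440, "mss": 1422, "ts_val": 55654581,
            "wscale": 8, "mp_capable_key": 0x69CCDE41DCA19B8F}
syn_received = dict(syn_sent, ttl=47, win=384, mss=1285)

synack_sent = {"ttl": 64, "win": 28560, "mss": 1460, "ts_val": 155835098,
               "ts_ecr": 55654581, "wscale": 8,
               "mp_capable_key": 0x32205E67A94AD606}
synack_received = dict(synack_sent, ttl=51, win=384, mss=1285)

syn_diff = changed_fields(syn_sent, syn_received)
synack_diff = changed_fields(synack_sent, synack_received)
print(syn_diff)
print(synack_diff)
```

A lower TTL is expected on any routed path. The rewritten window and MSS are the signs of a middlebox, while the timestamps and the MP_CAPABLE keys are left untouched in both directions.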

Since the MP_CAPABLE option has been received in the SYN+ACK segment, the client can confirm the utilisation of Multipath TCP on this connection. This is done by placing the MP_CAPABLE option in the third ack.

09:27:14.188574 IP (tos 0x0, ttl 64, id 15496, offset 0, flags [DF], proto TCP (6), length 80)

client1.47862 > server1.49803: Flags [.], cksum,

seq 1, ack 1, win 112, options [nop,nop,TS val 55656453 ecr 155835098,

mptcp capable {0x69ccde41dca19b8f,0x32205e67a94ad606},

mptcp dss ack 3426753824], length 0

This segment is received by the server as follows.

09:27:23.456784 IP (tos 0x0, ttl 47, id 15495, offset 0, flags [DF], proto TCP (6), length 80)

client.47862 > server1.49803: Flags [.], cksum,

seq 1, ack 1, win 384, options [nop,nop,TS val 55654655 ecr 155835098,

mptcp capable {0x69ccde41dca19b8f,0x32205e67a94ad606},

mptcp dss ack 3426753824], length 0

The middlebox has updated the window and the timestamp, but it did not change anything in the MP_CAPABLE option, and Multipath TCP is confirmed on both the client and the server. The server immediately sends a duplicate acknowledgement containing the ADD_ADDR option to announce its second address.

09:27:23.456960 IP (tos 0x0, ttl 64, id 60464, offset 0, flags [DF], proto TCP (6), length 68)

server1.49803 > client.47862: Flags [.], cksum,

seq 1, ack 1, win 112, options [nop,nop,TS val 155835826 ecr 55654655,

mptcp add-addr id 3 server2,mptcp dss ack 2495228045], length 0

Unfortunately, this segment never reaches the client. As the current path managers do not retransmit the ADD_ADDR option on a regular basis, the client is never informed of the second address.

Since the client also has a second address, it tries to inform the server by sending a duplicate acknowledgement.

09:27:14.188636 IP (tos 0x0, ttl 64, id 15497, offset 0, flags [DF], proto TCP (6), length 68)

client1.47862 > server1.49803: Flags [.], cksum,

seq 1, ack 1, win 112, options [nop,nop,TS val 55656453 ecr 155835098,

mptcp add-addr id 4 client2,mptcp dss ack 3426753824], length 0

This segment never reaches the server. It is likely that the PEP notices that the segment is a duplicate acknowledgement and filters it. A possible solution to enable Multipath TCP to correctly pass through this particular middlebox would be to place the ADD_ADDR option inside segments that contain data, or to use techniques that ensure its reliable delivery, as proposed in Exploring Mobile/WiFi Handover with Multipath TCP.

Note that the Multipath TCP options are correctly transported in other packets. For example, here is the first data segment sent by the server.

09:27:23.466575 IP (tos 0x0, ttl 64, id 60465, offset 0, flags [DF], proto TCP (6), length 107)

server1.49803 > client.47862: Flags [P.], cksum,

seq 1:36, ack 1, win 112, options [nop,nop,TS val 155835836 ecr 55654655,

mptcp dss ack 2495228045 seq 3426753824 subseq 1 len 35,nop,nop], length 35

This segment is received by the client as follows.

09:27:14.987619 IP (tos 0x0, ttl 51, id 60465, offset 0, flags [DF], proto TCP (6), length 107)

server1.49803 > client1.47862: Flags [P.], cksum,

seq 1:36, ack 1, win 320, options [nop,nop,TS val 155835173 ecr 55656453,

mptcp dss ack 2495228045 seq 3426753824 subseq 1 len 35,nop,nop], length 35

The middlebox has modified the timestamp and the window but did not change the Multipath TCP options.

The client can also send data to the server.

09:27:14.988371 IP (tos 0x0, ttl 64, id 15499, offset 0, flags [DF], proto TCP (6), length 93)

client1.47862 > server1.49803: Flags [P.], cksum,

seq 1:22, ack 36, win 112, options [nop,nop,TS val 55657253 ecr 155835173,

mptcp dss ack 3426753859 seq 2495228045 subseq 1 len 21,nop,nop], length 21

The server receives this segment as follows.

09:27:24.320654 IP (tos 0x0, ttl 47, id 15497, offset 0, flags [DF], proto TCP (6), length 93)

client.47862 > server1.49803: Flags [P.], cksum,

seq 1:22, ack 36, win 320, options [nop,nop,TS val 55654742 ecr 155835836,

mptcp dss ack 3426753859 seq 2495228045 subseq 1 len 21,nop,nop], length 21

It is interesting to compare the acknowledgement sent by the client for this segment.

09:27:14.987885 IP (tos 0x0, ttl 64, id 15498, offset 0, flags [DF], proto TCP (6), length 60)

client1.47862 > server1.49803: Flags [.], cksum,

seq 1, ack 36, win 112, options [nop,nop,TS val 55657253 ecr 155835173,

mptcp dss ack 3426753859], length 0

with the acknowledgement that the server actually receives.

09:27:23.487569 IP (tos 0x0, ttl 237, id 15496, offset 0, flags [DF], proto TCP (6), length 68)

client.47862 > server1.49803: Flags [.], cksum,

seq 1, ack 36, win 320, options [nop,eol], length 0

The server receives the acknowledgement within 21 msec of the transmission of the data segment. Furthermore, it has a TTL of 237 while the acknowledgement sent by the client had a TTL of 64. Since the two packets also have different IPv4 ids, it is very likely that the acknowledgement was generated by the PEP and not copied from the client. Note that the middlebox has replaced the second nop option with an eol option. A closer look at the packet reveals something even stranger. The IPv4 packet is 68 bytes long while it contains an IPv4 header (20 bytes), a TCP header (20 bytes) and the nop and eol options, both one byte long. The packet thus contains 26 bytes of garbage (starting with 0c1e below):

0x0010: baf6 c28b cdcd 0434 cce4 31a5

0x0020: c010 0140 1515 0000 0100 0c1e 69cc de41

0x0030: dca1 9b8f 0a08 0101 0351 3902 0949 ddbc

0x0040: 0000 0000

The value of the TCP Data Offset (c, i.e. 48 bytes) indicates that the middlebox considers that bytes 0c1e ... 0000 belong to the TCP options, but since they appear after the eol option, they are ignored by the TCP stack on the receiver.
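This arithmetic can be checked by walking the TCP options in the dumped bytes. The sketch assumes that the dump starts at the TCP header, which is consistent with its first two 16-bit words (0xbaf6 and 0xc28b are ports 47862 and 49803); the function name is ours.

```python
# TCP header bytes copied from the hex dump above.
TCP_HEX = (
    "baf6c28bcdcd0434cce431a5"
    "c010014015150000"
    "01000c1e69ccde41"
    "dca19b8f0a080101035139020949ddbc"
    "00000000"
)


def walk_tcp_options(tcp: bytes):
    """Return (header length, options seen, option bytes ignored after eol)."""
    header_len = (tcp[12] >> 4) * 4     # Data Offset is expressed in 32-bit words
    options, i = [], 20                 # options start after the fixed header
    while i < header_len:
        kind = tcp[i]
        if kind == 0:                   # eol: nothing after it is parsed
            options.append("eol")
            i += 1
            break
        if kind == 1:                   # nop: single byte, no length field
            options.append("nop")
            i += 1
            continue
        length = tcp[i + 1]
        if length < 2:                  # malformed option, stop parsing
            break
        options.append(f"kind {kind}")
        i += length
    return header_len, options, header_len - i


tcp = bytes.fromhex(TCP_HEX)
header_len, options, ignored = walk_tcp_options(tcp)
src_port = int.from_bytes(tcp[0:2], "big")
print(src_port, header_len, options, ignored)
```

The parser stops at the eol option, so the 26 remaining bytes, which still contain what looks like leftover option data, are never interpreted by the receiving stack.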

This actually removes the timestamp option and the DSS option. Removing the timestamp option was possible according to RFC 1323, but this behaviour is no longer permitted by RFC 7323. The removal of the DSS option is a problem for Multipath TCP since there is no data acknowledgement. Fortunately, RFC 6824 and the Multipath TCP implementation in the Linux kernel anticipated this problem. Indeed, this ack acknowledges new data without containing a DSS option, so Multipath TCP immediately falls back to regular TCP. This preserves connectivity at the cost of losing the benefits of Multipath TCP.
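The fallback rule can be modelled in a few lines. This is a deliberately simplified reading of the behaviour described above for the initial subflow, not the kernel logic: sequence numbers are relative as in the traces, wrap-around is ignored, and the state fields are assumptions made for the illustration.

```python
from dataclasses import dataclass


@dataclass
class SubflowState:
    snd_una: int                  # oldest unacknowledged sequence number
    dss_confirmed: bool = False   # some data was already acked with a DSS Data ACK
    fallback: bool = False        # connection has reverted to regular TCP


def on_ack(state: SubflowState, ack: int, has_dss_data_ack: bool) -> bool:
    """Process one incoming ack and return True once fallback is triggered."""
    if ack <= state.snd_una:
        return state.fallback     # no new data acknowledged, nothing to decide
    state.snd_una = ack
    if has_dss_data_ack:
        state.dss_confirmed = True
    elif not state.dss_confirmed:
        # New data acked without a DSS option: the path strips MPTCP options.
        state.fallback = True
    return state.fallback


server = SubflowState(snd_una=1)
after_third_ack = on_ack(server, ack=1, has_dss_data_ack=True)
after_stripped_ack = on_ack(server, ack=36, has_dss_data_ack=False)
print(after_third_ack, after_stripped_ack)
```

In the trace, the third ack of the handshake carries a DSS option but acknowledges no data, so it proves nothing about data segments. The ack for bytes 1:36 arrives without any DSS option and triggers the fallback.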

A similar problem happens in the other direction. The server has stopped using Multipath TCP and sends the following packet.

09:27:24.320870 IP (tos 0x0, ttl 64, id 60466, offset 0, flags [DF], proto TCP (6), length 52)

server1.49803 > client.47862: Flags [.], cksum,

seq 36, ack 22, win 112, options [nop,nop,TS val 155836690 ecr 55654742], length 0

This ACK does not contain any DSS option. It is processed by the middlebox that removes the timestamp option.

09:27:15.787814 IP (tos 0x0, ttl 254, id 60466, offset 0, flags [DF], proto TCP (6), length 68)

server1.49803 > client1.47862: Flags [.], cksum,

seq 36, ack 22, win 320, options [nop,eol], length 0

Again, note that the change in the TTL indicates that the middlebox has created a new packet to convey the acknowledgement to the client. At this point, the client also falls back to regular TCP, as shown by the next segment that it sends.

09:27:15.788047 IP (tos 0x0, ttl 64, id 15500, offset 0, flags [DF], proto TCP (6), length 844)

client1.47862 > server1.49803: Flags [P.], cksum,

seq 22:814, ack 36, win 112, options [nop,nop,TS val 55658053 ecr 155835173], length 792

The transfer continues like a regular TCP connection. Note that the TCP timestamps are back. This strange middlebox shows that the objective of preserving connectivity in the presence of middleboxes is well met by the Multipath TCP implementation in the Linux kernel.

Text updated on February 2nd and February 3rd based on comments from Raphael Bauduin and Gregory Detal

Simplifying Multipath TCP configuration with Babel

Multipath TCP builds its sub-flows based on pairs of the client’s and server’s IP addresses. When a host is connected to two different providers, it should have one IP address associated to each provider, which allows Multipath TCP to effectively use these two paths simultaneously. However, with a single default route, packets will follow the same path, independently of their source address, which prevents Multipath TCP from working properly.

The Multipath TCP website provides a recipe for manually configuring multiple routes, using policy routing, on a single host directly connected to multiple providers. Even for such a simple topology, the procedure consists of 7 commands. An analogous configuration for a more complex network, while possible, is exceedingly tedious, error-prone and fragile.

Consider for example the following topology, where routers A and B are connected to distinct providers, and the host H is connected to router C. Routers A and B have default routes for packets sourced in 10.1.1.0/24 and 10.1.2.0/24 respectively.

      0.0.0.0/0 --- A ----- B --- 0.0.0.0/0
    from 10.1.1.0/24 \     / from 10.1.2.0/24
                      \   /
                       \ /
                        C
                        |
                        |
                        H

A manual configuration of this network will require an intervention on at least routers A, B and C. If for some reason the topology changes, a link fails or a router crashes, the network will fail to work correctly until an explicit intervention of the administrator.

Routing configuration of complex networks should not be done manually. That is the role of a dynamic routing protocol.

The source-specific version of the Babel routing protocol (RFC 6126) dynamically configures a network to route packets based on both their source and destination addresses. Babel learns the routes installed in the kernel of the gateway routers (A and B), announces them through the network, and installs them in the routing tables of the routers. Only the edge gateways need manual configuration to teach Babel which source prefix to apply to the default route they provide. In contrast, internal routers (C) do not need any manual configuration. In our example, we need to add the following directives to the Babel configuration file (/etc/babeld.conf) of the two gateways:

On router A:

redistribute ip 0.0.0.0/0 eq 0 src-prefix 10.1.1.0/24

redistribute ip 0.0.0.0/0 eq 0 proto 3 src-prefix 10.1.1.0/24

On router B:

redistribute ip 0.0.0.0/0 eq 0 src-prefix 10.1.2.0/24

redistribute ip 0.0.0.0/0 eq 0 proto 3 src-prefix 10.1.2.0/24

The second line specifies proto 3 because Babel does not redistribute proto boot routes by default.
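Once these routes have been propagated, router C holds two default routes that differ only in their source prefix. The lookup it then performs can be sketched as follows. The table layout and function are illustrative, and the tie-break (most specific destination first, then most specific source) is an assumption that does not matter in this topology since both destinations are 0.0.0.0/0.

```python
import ipaddress

# (destination prefix, source prefix, next hop) as learned by router C.
ROUTES_AT_C = [
    ("0.0.0.0/0", "10.1.1.0/24", "A"),
    ("0.0.0.0/0", "10.1.2.0/24", "B"),
]


def next_hop(src: str, dst: str, routes=ROUTES_AT_C):
    """Return the next hop for a packet, or None if no route matches."""
    src_ip, dst_ip = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    best_key, best_hop = None, None
    for dst_prefix, src_prefix, hop in routes:
        d = ipaddress.ip_network(dst_prefix)
        s = ipaddress.ip_network(src_prefix)
        if dst_ip in d and src_ip in s:
            key = (d.prefixlen, s.prefixlen)
            if best_key is None or key > best_key:
                best_key, best_hop = key, hop
    return best_hop


via_first = next_hop("10.1.1.5", "192.0.2.1")
via_second = next_hop("10.1.2.5", "192.0.2.1")
print(via_first, via_second)
```

Two subflows opened by H from its two addresses towards the same server therefore leave through different providers, which is what Multipath TCP needs.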

The case of IPv6 is similar. In fact, it may be simpler, because Babel recognises kernel-side source-specific routes: in the best case, no additional configuration may be needed in IPv6.

The source-specific version of Babel works on Linux, and is available in OpenWRT as the babels package. You can also retrieve its source code from:

git clone https://github.com/boutier/babeld

Additional information about the Babel protocol and its operation with Multipath TCP may be found in the technical report entitled Source-specific routing, written by Matthieu Boutier and Juliusz Chroboczek.

Useful Multipath TCP software

Since the first release of the Multipath TCP implementation in the Linux kernel, various software packages have been written by project students and project contributors. Some of this software is available from the official Multipath TCP github repository. The core packages are:

- The Multipath TCP patch for the Linux kernel

- The Multipath TCP extension for the net-tools

- The Multipath TCP extension for ip-route

In addition to these packages, the repository contains several ports for specific platforms, including the Raspberry Pi and several smartphones. However, most of these ports were based on an old version of the Multipath TCP kernel and have not been maintained.

Extensions to tcpdump and wireshark were initially posted as patches. The basic support for Multipath TCP is now included in these two important packages. Extensions are still being developed, e.g. https://github.com/teto/wireshark

Besides these core packages, several other open-source packages can be very useful for Multipath TCP users and developers:

- tracebox is a flexible traceroute-like package that detects middlebox interference inside a network. It can be used to verify whether packets containing any of the MPTCP options pass through firewalls. The source code for tracebox is available from https://github.com/tracebox/tracebox

- mptcp-scapy is an extension to the popular scapy packet manipulation software that supports Multipath TCP. It was recently updated and can be obtained from https://github.com/Neohapsis/mptcp-abuse

- MPTCPtrace is the equivalent for Multipath TCP of the popular tcptrace package. It parses libpcap files and extracts both statistics and plots from the packet capture. This is one of the best ways to analyse the dynamics of Multipath TCP in a real network.

- MBClick is a set of Click elements that reproduce the behaviour of various types of middleboxes. It proved to be very useful while developing the middlebox support in Multipath TCP.

- packetdrill is a tool that precisely tests the behaviour of a TCP implementation. It has been extended to support Multipath TCP and a set of tests has been developed: https://github.com/ArnaudSchils/packetdrill_mptcp

- MPTCP vagrant is a set of scripts that can be used to automate the creation of Multipath TCP capable VirtualBox images.