A closer look at the scientific literature on Multipath TCP

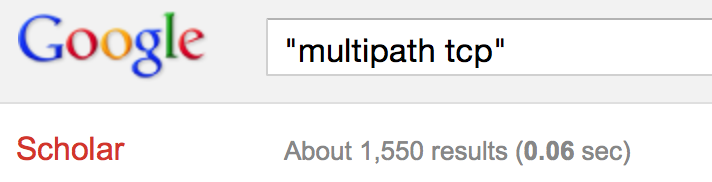

Some time ago, @ben_pfaff sent a tweet saying that he was impressed by a Google Scholar search that returns more than 1,000 hits for “open vswitch”. This triggered my curiosity, and I wondered what the current impact of Multipath TCP on the scientific literature was.

Google Scholar clearly indicates that there is a Multipath TCP effect in the academic community. For the “Multipath TCP” query, Google Scholar already lists more than 1,500 results.

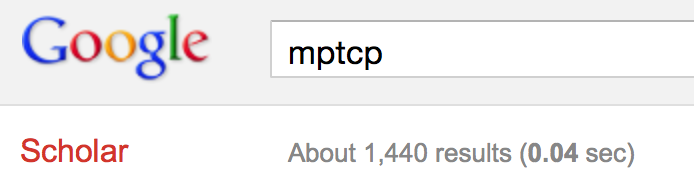

For the “mptcp” query, Google Scholar reports a similar number of documents.

This is a clear indication that a growing number of researchers are adopting Multipath TCP and proposing extensions and improvements to it. Researchers who start working on Multipath TCP will need to understand an already large set of articles. As a first step towards a detailed bibliography on Multipath TCP, I’ve started to collect notes on IETF documents and scientific papers on this topic. This is a work in progress that will be updated whenever I find time to dig into older papers or when interesting new papers are published.

The current version of the annotated bibliography will always be available from https://github.com/obonaventure/mptcp-bib. This repository contains all the LaTeX and BibTeX files and could be useful to anyone doing research on Multipath TCP. The PDF version (mptcp-bib.pdf) will also be updated after each major change for those who prefer PDF documents.

Interesting Multipath TCP talks

Various tutorials and training sessions on Multipath TCP have been given in recent years. Some of them have been recorded and are available on youtube.com.

The most recent video presentation is the talk given by Octavian Purdila from Intel at the netdev’01 conference. In this talk, Octavian starts with a brief tutorial on Multipath TCP targeted at Linux kernel networking developers and then describes in detail the structure of the current code and the plans for upstreaming it into the official Linux kernel.

https://www.youtube.com/watch?v=wftz2cU5SZs

A longer tutorial on the Multipath TCP protocol was given by Olivier Bonaventure at IETF’87 in Berlin in August 2013.

https://www.youtube.com/watch?v=Wp0Kr3B64tA

Christoph Paasch gave a shorter Multipath TCP tutorial earlier, at FOSDEM’13 in Brussels.

https://www.youtube.com/watch?v=wvO0bcWgXCs

Before that, Costin Raiciu and Christoph Paasch gave a one-hour Google Research talk on the design of the protocol and several of its use cases.


https://www.youtube.com/watch?v=02nBaaIoFWU

The Google Research talk was given a few days after the presentation of the USENIX NSDI’12 paper that received the community award. This presentation is available from the USENIX website.

mptcptrace demo, experiment five

This is the fifth post of a series of five. Context is presented in the first post. The second post is here. The third post is here. The fourth post is here.

Fifth experiment

| Green at 0s: | |
|---|---|
| Green at 5s: | |
| Green at 15s: | |
| Red: | |
| Client: | |

In this last experiment of the series, we come back to the third experiment. Instead of adding a 1% loss rate on the red subflow after 15 seconds, we change the MPTCP scheduler and use the round-robin scheduler. It is worth noting that the round-robin scheduler still respects the congestion window of each subflow.
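The kernel scheduler itself is C code inside the Linux MPTCP implementation; the Python below is only a simplified model of the behaviour described above: rotate over the subflows, but skip any subflow whose congestion window is already full. The names `Subflow`, `RoundRobinScheduler`, `pick` and the window sizes are hypothetical, chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Subflow:
    name: str
    cwnd: int           # congestion window, in segments (hypothetical values)
    in_flight: int = 0  # segments sent but not yet acknowledged

    def has_space(self) -> bool:
        return self.in_flight < self.cwnd


class RoundRobinScheduler:
    """Simplified round robin that never exceeds a subflow's cwnd."""

    def __init__(self, subflows: List[Subflow]) -> None:
        self.subflows = subflows
        self.next_index = 0

    def pick(self) -> Optional[Subflow]:
        n = len(self.subflows)
        for offset in range(n):
            sf = self.subflows[(self.next_index + offset) % n]
            if sf.has_space():
                # The next call starts from the subflow after the chosen one.
                self.next_index = (self.next_index + offset + 1) % n
                return sf
        return None  # every congestion window is full: wait for acks


def schedule(sched: RoundRobinScheduler, segments: int) -> List[str]:
    """Assign segments to subflows until data or window space runs out."""
    trace = []
    for _ in range(segments):
        sf = sched.pick()
        if sf is None:
            break
        sf.in_flight += 1
        trace.append(sf.name)
    return trace


green = Subflow("green", cwnd=2)
red = Subflow("red", cwnd=4)
print(schedule(RoundRobinScheduler([green, red]), 8))
```

With these assumed windows, the scheduler alternates while both subflows have room, then keeps sending on red once green's window is full, and stops after six segments because no window has space left.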

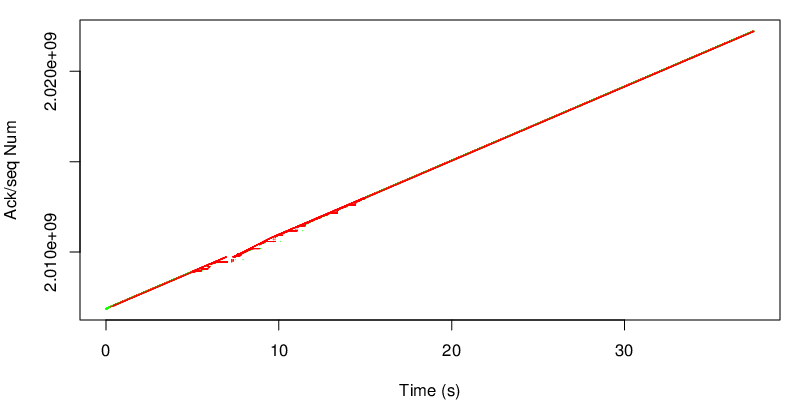

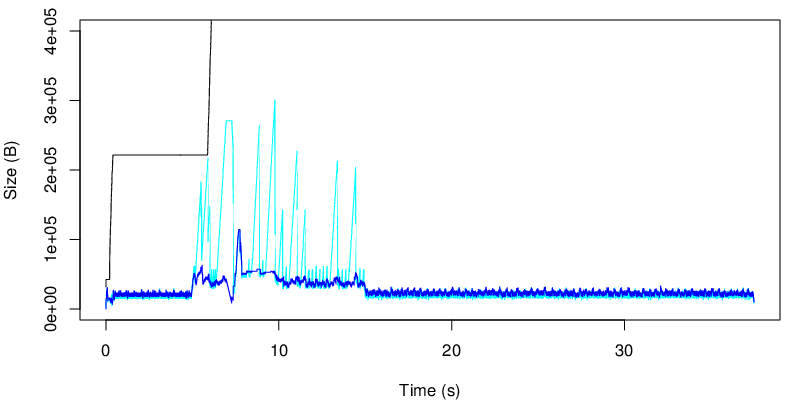

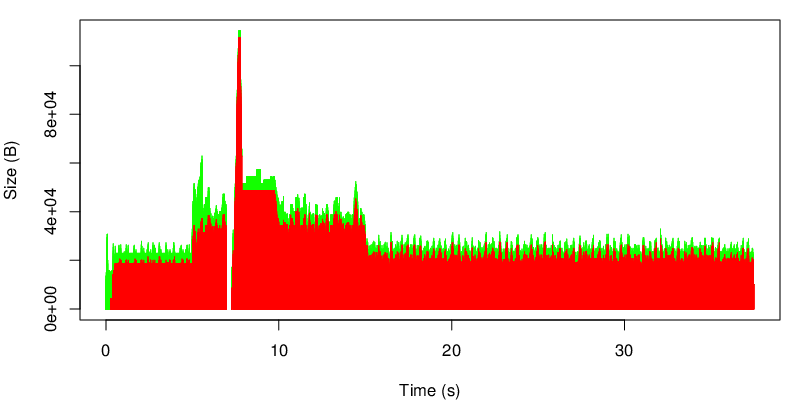

Let’s look at the evolution of the sequence number:

The first thing we notice is the small steps between 5 and 15 seconds. It also looks as if the red subflow is used more, but if we zoom in:

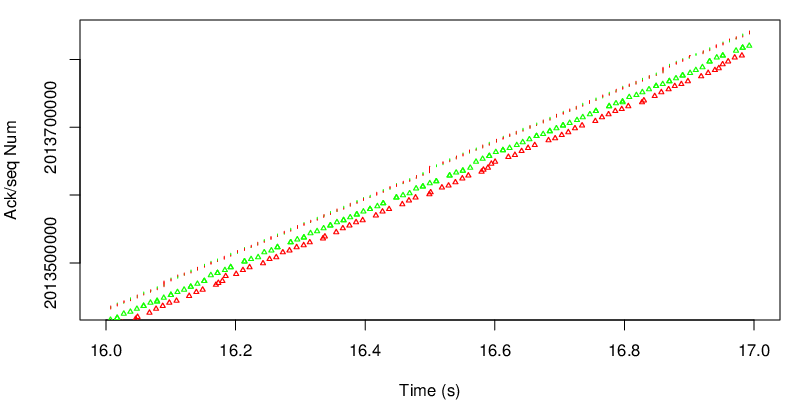

we can confirm that both subflows are used. We see three lines:

- The segments: since red and green segments are sent together by the sender, they do not form two separate lines.

- The green acks: they are closer to the segment line.

- The red acks: all red acks are late from the MPTCP point of view. This is expected, since the green path has a shorter delay.
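Why the red acks look late can be sketched with a toy model. Assume, for simplicity, that the receiver's cumulative DATA_ACK value could be returned over either subflow and that only the one-way return delay differs; the delays and ack values below are hypothetical. At any given instant, the sender has then seen a more recent (larger) DATA_ACK over the faster path.

```python
from typing import List


def latest_data_ack(now_ms: int, gen_times_ms: List[int],
                    ack_values: List[int], return_delay_ms: int) -> int:
    """Highest cumulative DATA_ACK that has reached the sender by now_ms
    over a return path with the given one-way delay."""
    arrived = [value for gen, value in zip(gen_times_ms, ack_values)
               if gen + return_delay_ms <= now_ms]
    return max(arrived, default=0)


# Hypothetical receiver: every 10 ms it emits a cumulative DATA_ACK
# that has advanced by 1000 bytes.
gen_times = [10 * k for k in range(1, 21)]
ack_values = [1000 * k for k in range(1, 21)]

GREEN_DELAY_MS = 10  # assumed one-way return delay of the green path
RED_DELAY_MS = 40    # assumed one-way return delay of the red path

green = latest_data_ack(100, gen_times, ack_values, GREEN_DELAY_MS)
red = latest_data_ack(100, gen_times, ack_values, RED_DELAY_MS)
print(green, red)  # 9000 6000: the red path reports an older DATA_ACK
```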

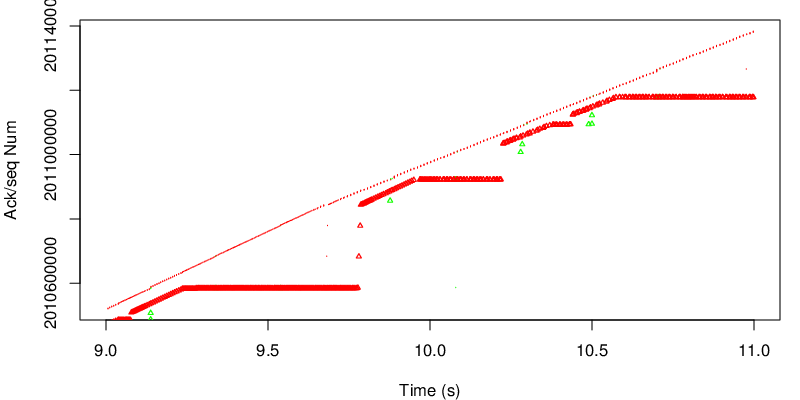

If we look at the evolution of the sequence number between 5 and 15 seconds, we can observe a series of stairs.

If we take a closer look at one of these stairs:

because the green subflow is lossy during this period, data arrives out of order at the receiver. Since we use the round-robin scheduler, MPTCP still sends some data over the green path.
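The stair shape follows from the fact that the data-level acknowledgement is cumulative: it can only advance over data received in order. A minimal sketch, assuming segments are counted by index, even segments go over green and odd ones over red, and green segment 2 is lost and only arrives after its retransmission (all times in arbitrary units and purely hypothetical):

```python
from typing import List, Tuple


def cumulative_acks(arrivals: List[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """For each (time, segment_index) arrival, return (time, next expected
    segment index), i.e. a cumulative acknowledgement counted in segments."""
    received = set()
    next_expected = 0
    acks = []
    for t, seg in arrivals:
        received.add(seg)
        # Advance only over the contiguous, in-order prefix.
        while next_expected in received:
            next_expected += 1
        acks.append((t, next_expected))
    return acks


# Round robin: even segments on green, odd on red. Segment 2 (green) is
# lost and only arrives after its retransmission at t=9.
arrivals = [(1, 0), (2, 1), (3, 3), (4, 4), (5, 5), (9, 2)]
print(cumulative_acks(arrivals))
```

The acknowledgement stays flat at 2 while segments 3 to 5 are buffered out of order, then jumps to 6 once the missing segment arrives: one "stair".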

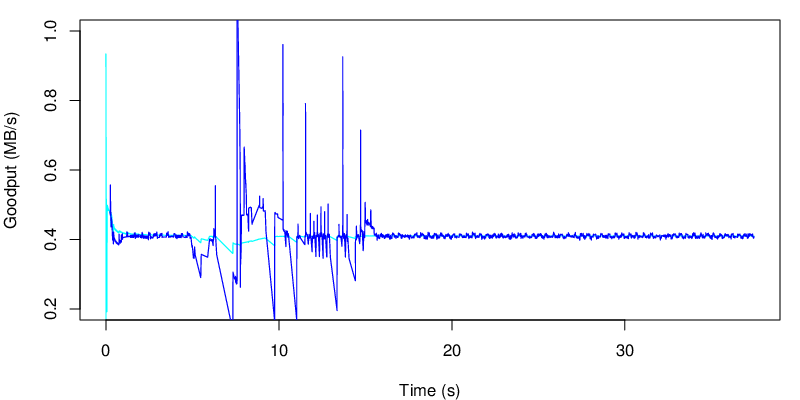

If we now look at the evolution of the goodput:

The lossy link clearly perturbs the “instantaneous” goodput.

However, the impact on the average goodput is more limited. Depending on the application, these variations may or may not be a problem.
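The difference between the two curves can be illustrated with a small computation. This sketch assumes goodput is measured as bytes delivered in order to the application per fixed interval; it is not necessarily how mptcptrace computes it, and the per-interval values are hypothetical.

```python
from typing import List, Tuple


def goodputs(delivered: List[int],
             interval_s: float = 1.0) -> Tuple[List[float], List[float]]:
    """delivered[i] = bytes delivered in order during interval i.
    Returns (per-interval goodput, running average since the start)."""
    instantaneous = [b / interval_s for b in delivered]
    averages = []
    total = 0
    for i, b in enumerate(delivered, start=1):
        total += b
        averages.append(total / (i * interval_s))
    return instantaneous, averages


# Hypothetical values: a dip in interval 3 (held-back data), then catch-up
inst, avg = goodputs([100, 100, 20, 180, 100])
print(inst)
print(avg)
```

The per-interval value drops to 20 and then spikes to 180 when held-back data is released, while the running average only dips to about 73 and returns to 100.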

If we look at the evolution of the MPTCP unacked data, we see large variations between 5 and 15 seconds. They are caused by the reordering that happens during this period. This is not a big issue as long as the receive window is large enough to absorb these variations, but it can become one in scenarios where the window is too small. Note also that, in this case, MPTCP may use more memory on the receiver because of buffer auto-tuning.
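The window argument can be made concrete. In Multipath TCP the receive window is shared by the connection and is relative to the DATA_ACK, so data that is held back by reordering keeps the unacked amount high. A minimal sketch, with a hypothetical segment size and window sizes (the function names are illustrative, not kernel identifiers):

```python
def mptcp_unacked(data_snd_nxt: int, data_ack: int) -> int:
    """Bytes sent at the MPTCP (data) level but not yet covered by a DATA_ACK."""
    return data_snd_nxt - data_ack


def window_limited(data_snd_nxt: int, data_ack: int, rcv_wnd: int) -> bool:
    """True when the connection-level receive window is exhausted, so the
    sender must wait for the DATA_ACK to advance before sending new data."""
    return mptcp_unacked(data_snd_nxt, data_ack) >= rcv_wnd


MSS = 1400  # hypothetical segment size in bytes
# Reordering holds the DATA_ACK at segment 2 while the sender is at segment 6.
snd_nxt, ack = 6 * MSS, 2 * MSS
print(mptcp_unacked(snd_nxt, ack))              # 5600 bytes outstanding
print(window_limited(snd_nxt, ack, 4 * MSS))    # small window: sender stalls
print(window_limited(snd_nxt, ack, 64 * 1024))  # larger window absorbs it
```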

Finally, we can take a look at the evolution of the unacked data at the TCP level.

We can observe that both subflows are used during the whole connection, but the losses on the green subflow between 5 and 15 seconds lead to heavier use of the red subflow during this period.

Conclusion

This ends the series of posts showing some basic MPTCP experiments. mptcptrace was used to extract the values from the traces, and R scripts were used to produce the graphs. However, we did not really post-process the data in R. We have more experiments and visualizations that we will present later.

Why are there sometimes long delays before the establishment of MPTCP connections?

Multipath TCP users sometimes complain that Multipath TCP connections take longer to establish than regular TCP connections. This can happen in some networks, and the culprit is usually a middlebox hidden on the path between the client and the server. The problem can easily be detected by capturing packets on the client with tcpdump. Such a capture looks like this:

    11:24:05.225096 IP client.59499 > multipath-tcp.org.http:
      Flags [S], seq 270592032, win 29200, options [mss 1460,sackOK,
      TS val 7358805 ecr 0,nop,wscale 7,
      mptcp capable csum {0xaa7fa775d16fa6bf}], length 0

The client sends a SYN with the MP_CAPABLE option. Since it receives no answer, it retransmits the SYN.

    11:24:06.224215 IP client.59499 > multipath-tcp.org.http: Flags [S],
      seq 270592032, win 29200, options [mss 1460,sackOK,
      TS val 7359055 ecr 0,nop,wscale 7,
      mptcp capable csum {0xaa7fa775d16fa6bf}], length 0

Unfortunately, it has to retransmit it two more times:

    11:24:08.228242 IP client.59499 > multipath-tcp.org.http: Flags [S],
      seq 270592032, win 29200, options [mss 1460,sackOK,
      TS val 7359556 ecr 0,nop,wscale 7,
      mptcp capable csum {0xaa7fa775d16fa6bf}], length 0
    11:24:12.236284 IP client.59499 > multipath-tcp.org.http: Flags [S],
      seq 270592032, win 29200, options [mss 1460,sackOK,
      TS val 7360558 ecr 0,nop,wscale 7,
      mptcp capable csum {0xaa7fa775d16fa6bf}], length 0

At this point, Multipath TCP assumes that a middlebox on the path to the server may be discarding SYN segments that carry the MP_CAPABLE option, and it disables Multipath TCP: the next SYN is sent without the option.

    11:24:20.244351 IP client.59499 > multipath-tcp.org.http: Flags [S],
      seq 270592032, win 29200, options [mss 1460,sackOK,
      TS val 7362560 ecr 0,nop,wscale 7], length 0

This segment reaches the server, which replies immediately:

    11:24:20.396718 IP multipath-tcp.org.http > client.59499: Flags [S.],
      seq 3954135908, ack 270592033, win 28960, options [mss 1380,sackOK,
      TS val 2522075773 ecr 7362560,nop,wscale 7], length 0
    11:24:20.396748 IP client.59499 > multipath-tcp.org.http: Flags [.],
      ack 1, win 229, options [nop,nop,TS val 7362598 ecr 2522075773], length 0

As the trace shows, the middlebox, by dropping the SYN segments that contain the MP_CAPABLE option, has delayed the establishment of the TCP connection by fifteen seconds. This delay is determined by the initial retransmission timer (one second in this example) and by the exponential backoff that TCP applies to successive retransmissions of the same segment.
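The backoff can be checked directly against the timestamps of the five SYNs in the trace above. This small sketch parses them with the standard `datetime` module and computes the gaps between consecutive SYNs; the expected doubling (1, 2, 4, 8 seconds, assuming a one-second initial timer) is then visible after rounding.

```python
from datetime import datetime
from typing import List

# Timestamps of the SYNs sent by the client, copied from the trace above.
SYN_TIMES = [
    "11:24:05.225096",  # first SYN with MP_CAPABLE
    "11:24:06.224215",  # retransmissions with MP_CAPABLE
    "11:24:08.228242",
    "11:24:12.236284",
    "11:24:20.244351",  # SYN without MP_CAPABLE (fallback)
]


def gaps(times: List[str]) -> List[float]:
    """Seconds elapsed between consecutive timestamps."""
    parsed = [datetime.strptime(t, "%H:%M:%S.%f") for t in times]
    return [(b - a).total_seconds() for a, b in zip(parsed, parsed[1:])]


g = gaps(SYN_TIMES)
print([round(x) for x in g])  # [1, 2, 4, 8]: the timer doubles each time
print(round(sum(g), 2))       # about 15 seconds before the fallback SYN
```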

What can Multipath TCP users do to reduce this delay?

- The best answer is to contact their sysadmins/network administrators, use a tool like tracebox to detect where packets with the MP_CAPABLE option are dropped, and upgrade this middlebox.

- If changing the network is not possible, the implementation of Multipath TCP in the Linux kernel can be configured to fall back to regular TCP more aggressively through the net.mptcp.mptcp_syn_retries configuration variable described on http://multipath-tcp.org/pmwiki.php/Users/ConfigureMPTCP. This variable controls the number of retransmissions of the initial SYN before the kernel stops using the MP_CAPABLE option (the default is 3).
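The effect of this variable on the worst-case delay can be estimated with a short calculation. This sketch assumes that every SYN carrying MP_CAPABLE is dropped, that the initial retransmission timer is one second as in the trace, and that the timer doubles after each timeout; it ignores any kernel limits on the timer. The function name is hypothetical.

```python
def fallback_delay(syn_retries: int, initial_rto: float = 1.0) -> float:
    """Seconds between the first SYN and the first SYN sent without
    MP_CAPABLE, if the original SYN and all syn_retries retransmissions
    are dropped and the timer doubles after each timeout."""
    return sum(initial_rto * 2 ** i for i in range(syn_retries + 1))


for retries in (3, 2, 1, 0):
    print(retries, fallback_delay(retries))
```

With the default value of 3, this gives the fifteen seconds observed in the trace; a value of 1 would reduce the worst case to about three seconds. The trade-off is that a lower value also falls back to regular TCP more quickly when a SYN is lost for unrelated reasons. On kernels that expose it, the variable would typically be changed with `sysctl -w net.mptcp.mptcp_syn_retries=1`.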